Configure a Kubernetes namespace pool for Astronomer Software

Every Deployment within your Astronomer Software installation requires an individual Kubernetes namespace. You can configure a pool of pre-created namespaces to limit Astronomer Software's access to only those namespaces.

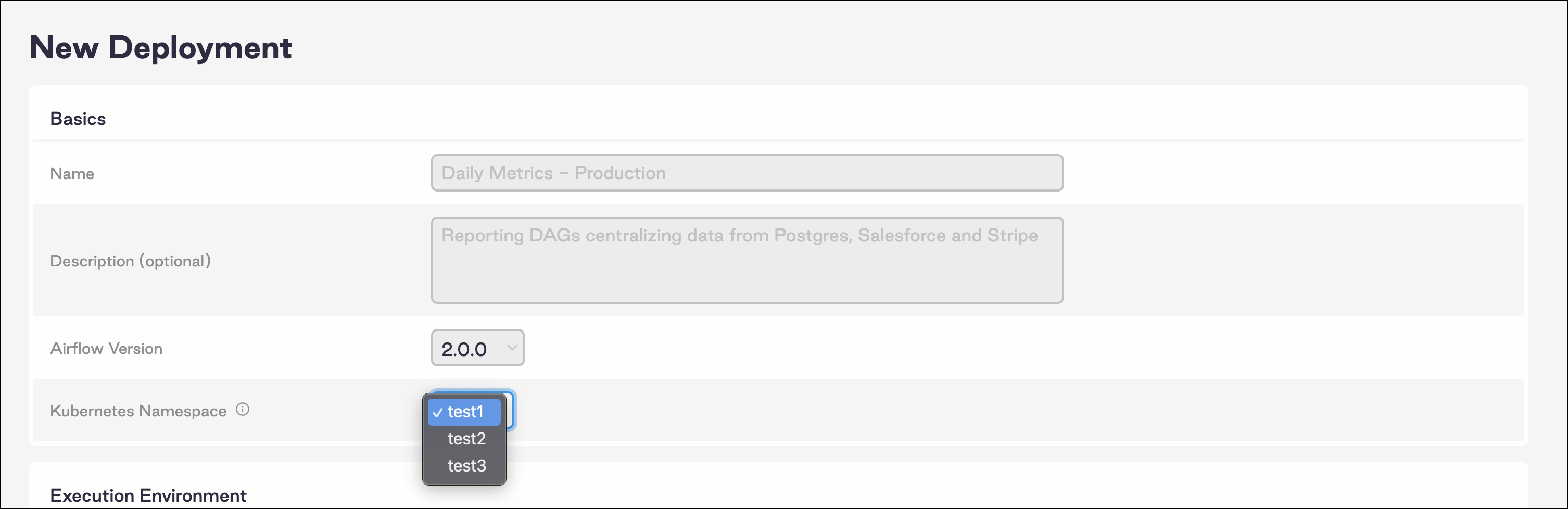

When you configure a pool of pre-created namespaces, Astronomer users must select a namespace from the pool whenever they create a new Deployment. Once the Deployment is created, its namespace is no longer available to other Deployments. When a Deployment is deleted, its namespace returns to the pool and becomes available again.

Benefits of pre-created namespaces

A pre-created namespace pool provides the following benefits:

- It limits the cluster-level permissions your organization needs to give to Astronomer Software. Astronomer Software requires permissions only for the individual namespaces you configure. Pre-created namespaces are recommended when your organization does not want to give Astronomer Software cluster-level permissions in a multi-tenant cluster.

- It can reduce costs and resource consumption. By default, Astronomer Software allows users to create Deployments until there is no more unreserved space in your cluster. If you use a pool, the number of active Deployments is capped at the number of namespaces in the pool. This is especially important if you run other elastic workloads on your Software cluster and need to prevent users from accidentally claiming your entire pool of unallocated resources.

Technical details

When a user creates a new Deployment with the UI or CLI, Astronomer Software creates the necessary Airflow components and isolates them in a dedicated Kubernetes namespace. These Airflow components depend on Kubernetes resources, some of which are stored as secrets.

To protect your Deployment's Kubernetes secrets, Astronomer uses dedicated service accounts for the parts of your Deployment that need to interact with external components. To enable this interaction, Astronomer Software by default needs extensive cluster-level permissions that cover every namespace in the cluster.

In Kubernetes, you can grant service account permissions for an entire cluster, or you can grant permissions only for existing namespaces. Astronomer uses cluster-level permissions because, by default, the number of Deployments to manage is unknown. This level of permissions is appropriate if Astronomer Software is running in its own dedicated cluster, but it can pose security risks when other applications run in the same cluster.

For example, consider the Astronomer Commander service, which controls creating, updating, and deleting Deployments. By default, Commander has permissions to interact with secrets, roles, and service accounts for all applications in your cluster. The only way to mitigate this risk is to use pre-created namespaces.
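The difference between the two permission models can be sketched with standard Kubernetes RBAC objects. In this hypothetical example, a ClusterRoleBinding grants the astronomer-commander service account a ClusterRole in every namespace, while a RoleBinding grants the same ClusterRole only inside one namespace. The binding and role names, and the <your-platform-namespace> placeholder for the namespace where the service account runs, are assumptions for illustration, not the resource names Astronomer actually creates.

```yaml
# Hypothetical names throughout; not Astronomer's actual resources.
# Cluster-wide grant: the ClusterRole's permissions apply in every namespace.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: example-commander-cluster-binding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: example-commander-cluster-role
subjects:
  - kind: ServiceAccount
    name: astronomer-commander
    namespace: <your-platform-namespace>
---
# Namespace-scoped grant: the same ClusterRole's permissions apply only
# inside <your-namespace-name>, the namespace that holds this RoleBinding.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: example-commander-binding
  namespace: <your-namespace-name>
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: example-commander-cluster-role
subjects:
  - kind: ServiceAccount
    name: astronomer-commander
    namespace: <your-platform-namespace>
```

Kubernetes allows a RoleBinding to reference a ClusterRole; the permissions then apply only within the RoleBinding's own namespace, which is the kind of scoping a namespace pool relies on.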

Setup

To create a namespace pool, you have the following options:

- Delegate the creation of each namespace, including its roles and rolebindings, to the Astronomer Helm chart. This option is suitable for most organizations.

- Create each namespace manually, including its roles and rolebindings. This option is suitable if you need to further restrict Astronomer's Kubernetes resource permissions. However, restricting permissions this way can prevent Deployments from functioning as expected.

Prerequisites

Option 1: Use the Astronomer Helm chart

- Set the following configuration in your values.yaml file:

- Save the changes in your values.yaml file and update your Astronomer Software installation. See Apply a config change.
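A minimal sketch of the values.yaml configuration for the first step above. The global.features.namespacePools.enabled and namespaces.names keys come from this page; the namespaces.create key, which tells the Helm chart to create each namespace together with its role and rolebinding, is an assumption based on the Astronomer Helm chart, so confirm it against the chart version you run. The namespace names are placeholders.

```yaml
global:
  features:
    namespacePools:
      # Turn on the pre-created namespace pool.
      enabled: true
      namespaces:
        # Assumed key: let the Helm chart create each namespace,
        # role, and rolebinding for Commander.
        create: true
        # Namespaces that make up the pool (placeholders).
        names:
          - <your-namespace-1>
          - <your-namespace-2>
```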

Based on the namespace names that you specified, Astronomer creates the necessary namespaces and Kubernetes resources. These resources have permissions scoped appropriately for most use cases.

Once you apply your configuration, you should be able to create new Deployments using a namespace from your pre-created namespace pool. You should also see the namespaces you specified in your cluster, for example in the output of kubectl get namespaces.

Option 2: Manually create namespaces, roles, and rolebindings

Complete this setup if you want to further limit the namespace permissions that Astronomer provides by default.

Step 1: Configure namespaces

For every namespace you want to add to a pool, you must create a namespace, a role, and a rolebinding that Astronomer Software uses to access the namespace. The rolebinding must be scoped to the astronomer-commander service account and to the namespace you are creating.

- In a new manifest file, add the following configuration. Replace <your-namespace-name> with the namespace you want to add to the pool.

- Save this file and name it commander.yaml.

- For each namespace you configured in astronomer.houston.config.deployments.preCreatedNamespaces, run kubectl -n <airflow namespace> apply -f commander.yaml.
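The manifest for the first step above might look like the following minimal sketch, built only from standard Kubernetes objects. The role and rolebinding names, the <your-platform-namespace> placeholder for the namespace where the astronomer-commander service account runs, and especially the rules list are assumptions for illustration, not the permissions Astronomer ships. Rules that are too narrow can leave Deployments in an unknown state, as described in the troubleshooting section.

```yaml
# commander.yaml: namespace, role, and rolebinding for one pool namespace.
# Placeholders: <your-namespace-name>, <your-platform-namespace>.
apiVersion: v1
kind: Namespace
metadata:
  name: <your-namespace-name>
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: deployment-commander-role # hypothetical name
  namespace: <your-namespace-name>
rules:
  # Illustrative rules only; adjust them to what your Deployments need.
  - apiGroups: [""]
    resources: ["serviceaccounts", "secrets", "configmaps", "services", "pods"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
  - apiGroups: ["apps"]
    resources: ["deployments", "statefulsets"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
  - apiGroups: ["rbac.authorization.k8s.io"]
    resources: ["roles", "rolebindings"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: deployment-commander-rolebinding # hypothetical name
  namespace: <your-namespace-name>
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: deployment-commander-role
subjects:
  # Bind the role to Commander's service account, scoped to this namespace.
  - kind: ServiceAccount
    name: astronomer-commander
    namespace: <your-platform-namespace>
```

Because the Namespace and both RBAC objects carry their own namespace fields, the same file can be reused for each pool namespace by changing only the <your-namespace-name> placeholder.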

Step 2: Configure a namespace pool in Astronomer

- Set the following values in your values.yaml file, making sure to specify all of the namespaces you created in the namespaces.names object:

- Save the changes in your values.yaml file and update Astronomer Software. See Apply a config change.
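A minimal sketch of the Step 2 values, assuming the pool settings live under global.features.namespacePools as the global.features.namespacePools.enabled setting on this page suggests. The namespaces.create key is an assumption based on the Astronomer Helm chart; it is set to false here because you already created the namespaces, roles, and rolebindings yourself. The names are placeholders for the namespaces you created in Step 1.

```yaml
global:
  features:
    namespacePools:
      enabled: true
      namespaces:
        # Assumed key: don't let the Helm chart create namespaces,
        # because commander.yaml already created them.
        create: false
        # Every namespace you created in Step 1 (placeholders).
        names:
          - <your-namespace-1>
          - <your-namespace-2>
```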

Creating Deployments in pre-created namespaces

After you enable the pre-created namespace pool, the namespaces you registered appear as options when you create a new Deployment in the Software UI.

When you create Deployments with the CLI, you are prompted to select one of the available namespaces for your new Deployment.

If no namespaces are available, an error message appears when you try to create a Deployment in the Software UI or CLI. To free a namespace, delete the Deployment that uses it; the namespace then returns to the pool.

Advanced settings

If your namespace configurations require more granularity, use the following settings in your values.yaml file.

Mixing global and advanced settings might result in unexpected behavior. If you use the advanced settings, Astronomer recommends that you set global.features.namespacePools.enabled to false.

When using these settings, Astronomer recommends enabling hard deletion for Deployments.

In the following example values.yaml file, the namespace pool is defined with these advanced settings instead of at the global level:
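A sketch of a values.yaml file that uses the advanced settings instead of the global pool. It keeps global.features.namespacePools.enabled set to false, as recommended above, and lists the pool under astronomer.houston.config.deployments.preCreatedNamespaces. The name: entry format for each namespace and the manualNamespaceNames and hardDeleteDeployment keys are assumptions based on the Astronomer Helm chart; check them against your chart version and the Delete a Deployment documentation before use.

```yaml
global:
  features:
    namespacePools:
      # Keep the global pool off when you use the advanced settings.
      enabled: false

astronomer:
  houston:
    config:
      deployments:
        # Assumed key: lets users choose a namespace for each Deployment.
        manualNamespaceNames: true
        # Namespaces available to new Deployments (placeholders).
        preCreatedNamespaces:
          - name: <your-namespace-1>
          - name: <your-namespace-2>
        # Assumed key: hard-delete Deployments so that their namespaces
        # return to the pool without waiting for a cleanup job.
        hardDeleteDeployment: true
```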

Troubleshooting namespace pools

My Deployment is in an unknown state

If a Deployment isn’t active, check the logs of the Commander pods to confirm that the Deployment commands executed successfully. When using a pre-created namespace pool with scoped roles, it’s possible that the astronomer-commander service account doesn’t have the permissions necessary to perform a required action. When Commander is successful, messages similar to the following appear:

When Commander is unsuccessful, messages similar to the following appear:

This error shows that Commander couldn’t create service accounts in the pre-created namespace, so the namespace's role needs to be updated to grant that permission.

My namespace is not returning to the pool

If you’re not using hard deletion, it can take several days for pre-created namespaces to become available after the associated Deployment is deleted. To enable hard deletes, see Delete a Deployment.

My Deployments using NFS deploys stopped working

NFS deploys don’t work if you use namespace pools and also set global.clusterRoles to false in your values.yaml file.
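For reference, the combination this section describes looks roughly like the following minimal sketch in values.yaml. Only the two relevant keys from this page are shown; the rest of your pool configuration is omitted.

```yaml
global:
  # Combined with an enabled namespace pool, this setting
  # stops NFS deploys from working.
  clusterRoles: false
  features:
    namespacePools:
      enabled: true
```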