Apache Airflow Resilience: Guide to Running Highly Available Data Pipelines

Apache Airflow® is a powerful open-source platform designed to programmatically author, schedule, and monitor workflows. It has become an essential tool for data teams, including data engineers, ML engineers, data analysts, and developers, enabling them to create complex data pipelines that automate and streamline data processing tasks.

In the realm of data operations (DataOps), reliability is paramount. Ensuring that data pipelines run smoothly, handle failures gracefully, and scale efficiently is crucial for maintaining data integrity and operational efficiency.

In this article, we will explore the key features of Apache Airflow that contribute to its reliability, as well as best practices for ensuring that your Airflow workflows are robust and dependable. Whether you are a data engineer managing ETL processes, an ML engineer deploying models, a data analyst performing real-time analytics, or a developer integrating multiple data sources, this guide will provide you with valuable insights and practical tips to enhance the reliability of your data pipelines.

The Impact of Airflow Failures and Downtime on Data Pipelines

While Apache Airflow is a powerful tool for managing data workflows, Airflow failures can have significant consequences for both data operations and the broader business. Understanding these impacts is crucial for data teams to prioritize reliability and take proactive measures to mitigate risks.

Operational Consequences of Data Pipeline Failures

From an operational perspective, failures in Airflow can disrupt the smooth functioning of data pipelines, leading to several challenges:

- Data Integrity Issues: Failed tasks can result in incomplete or corrupted data, compromising the accuracy and reliability of downstream analytics, reporting and applications.

- Delayed Insights: Delays in data processing can slow down the delivery of critical insights, affecting decision-making processes and operational efficiency.

- Increased Manual Overhead: Airflow failures often require manual intervention to diagnose and resolve issues, consuming valuable time and resources that could be better spent on other tasks.

- Resource Contention: Failed workflows can lead to resource contention and bottlenecks, impacting the performance of other tasks and systems.

Business Implications of Data Workflow Downtime

Beyond operational challenges, Airflow failures can have broader business implications:

- Loss of Revenue: Delays in data processing can lead to missed opportunities, such as delayed marketing campaigns or ineffective customer targeting, resulting in lost revenue.

- Reduced Customer Satisfaction: Inaccurate or delayed data can impact customer-facing applications and services, leading to reduced customer satisfaction and potential churn.

- Compliance Risks: Failures in data pipelines can result in non-compliance with regulatory reporting requirements, leading to legal and financial penalties.

- Reputational Damage: Consistent failures in data operations can erode trust and damage the organization’s reputation, affecting partnerships and future business opportunities.

By understanding the operational and business impacts of Airflow failures, data teams can better appreciate the importance of reliability and take proactive steps to ensure the robustness of their data pipelines. In the next section, we will explore the key features of Apache Airflow that contribute to its reliability.

Key Airflow Features That Enhance Data Pipeline Reliability and Uptime

Here are the Apache Airflow features you should use to build robust and resilient data workflows:

- Unified Workflow Orchestration

Airflow provides a centralized platform for orchestrating complex data pipelines involving multiple teams and technologies.

- Cross-Team Collaboration: Enable data engineers, analysts, and ML engineers to work together seamlessly on interconnected tasks within a pipeline.

- Modular DAGs: Break pipelines down into modular DAGs and tasks that can be independently developed, tested, and maintained.

- Scalable and Resilient Architecture

Designed for scalability, Airflow ensures that data pipelines can handle increasing volumes and complexity without compromising reliability.

- Distributed Execution: Use executors like CeleryExecutor or KubernetesExecutor to distribute tasks across multiple workers, enhancing fault tolerance.

- High Availability: Run redundant schedulers and workers to eliminate single points of failure, so pipelines keep running even if one component goes down.

- Remote Execution for Resilient, Distributed Workloads

Airflow 3 introduces Remote Execution, giving teams the ability to run tasks in compute environments that are physically or logically closer to their data. By decoupling orchestration from execution, pipelines can continue running even if a single remote worker becomes unavailable.

For organizations working with distributed or sensitive datasets, this means execution can happen in private clouds, VPC‑isolated clusters, or on‑prem systems without disrupting the central scheduler or metadata database. Workflows remain orchestrated from a single Airflow deployment, but execution is flexible, redundant, and tuned for the needs of each workload.

Combined with other Airflow features like event-driven and data-aware scheduling, DAG versioning, dynamic task mapping, and deferrable operators, Remote Execution helps teams minimize downtime, reduce latency, and design pipelines that adapt to changing infrastructure conditions.

- Advanced Scheduling and Dependency Management

Precise scheduling and dependency handling are key to reliable data pipelines, and Airflow excels in this area.

- Flexible Scheduling: Schedule tasks based on time intervals, external events, or complex conditions, catering to the needs of data analysts and ML workflows.

- Dependency Graphs: Define clear dependencies between tasks to ensure they execute in the correct order, maintaining data integrity throughout the pipeline.
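Conceptually, a dependency graph tells the scheduler which tasks may start once others have finished. The sketch below uses Python's standard-library `graphlib` to show that ordering for a hypothetical five-task pipeline. It illustrates the idea only and is not how Airflow's scheduler is implemented (Airflow evaluates each task's upstream states and trigger rules). In a DAG file, the same dependencies would be declared with the bitshift syntax, for example `extract >> [clean, validate] >> load >> report`.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each key lists the upstream tasks it depends on.
dependencies = {
    "clean": {"extract"},
    "validate": {"extract"},
    "load": {"clean", "validate"},
    "report": {"load"},
}


def execution_waves(deps):
    """Group tasks into waves; every task in a wave has all upstreams finished."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises CycleError if the graph is not acyclic
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose upstreams are all done
        waves.append(ready)
        sorter.done(*ready)  # mark them finished so downstream tasks unlock
    return waves


for i, wave in enumerate(execution_waves(dependencies), start=1):
    print(f"wave {i}: {wave}")

# A cycle can never be scheduled, which is why a pipeline must be a DAG.
try:
    execution_waves({"a": {"b"}, "b": {"a"}})
except CycleError as err:
    print("invalid pipeline:", err.args[0])
```

Tasks that land in the same wave (here `clean` and `validate`) have no dependency on each other, so an executor is free to run them in parallel.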

- Extensive Integration Capabilities

The rich ecosystem of Airflow operators and custom hooks allows seamless integration with various data sources, tools, and services used across data teams.

- Pre-built Operators: Leverage a vast library of operators for databases, APIs, cloud services, and big data platforms.

- Custom Plugins: Develop custom operators and hooks to meet specific requirements, enhancing reliability through tailored solutions.

- Robust Logging and Alerting

Effective Airflow logging tools keep data teams informed about pipeline health, enabling prompt responses to issues.

- Real-Time Monitoring: Use the intuitive Web UI and CLI to track task statuses, view logs, and manage workflows in real time.

- Alerts and Notifications: Configure email or messaging alerts for task failures, successes, or retries to keep all stakeholders informed.

- Error Handling and Retry Mechanisms

Airflow’s sophisticated error handling ensures transient issues don’t derail entire pipelines.

- Automatic Retries: Define Airflow retry logic with customizable intervals and limits, reducing the need for manual intervention.

- Failure Callbacks: Run custom actions when a task fails, such as triggering alternative workflows or cleaning up resources.
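Airflow configures this behavior per task through operator arguments such as `retries`, `retry_delay`, `on_retry_callback`, and `on_failure_callback`. To make the semantics concrete, here is a plain-Python sketch (not Airflow code; `run_with_retries` is a hypothetical helper) of a task that gets `retries + 1` attempts, with a callback after each retried attempt and a failure callback only once the last attempt fails:

```python
import time


def run_with_retries(task, retries=2, retry_delay=0.0, on_retry=None, on_failure=None):
    """Call task() up to retries + 1 times, loosely mirroring Airflow's semantics.

    on_retry(attempt, exc) runs after each failed attempt that will be retried;
    on_failure(attempt, exc) runs once, after the final attempt fails, and the
    exception is then re-raised so the failure stays visible.
    """
    for attempt in range(1, retries + 2):
        try:
            return task()
        except Exception as exc:
            if attempt <= retries:
                if on_retry:
                    on_retry(attempt, exc)
                time.sleep(retry_delay)  # fixed wait between attempts
            else:
                if on_failure:
                    on_failure(attempt, exc)  # e.g. clean up temp files or trigger a fallback
                raise


# A hypothetical flaky task that fails twice with a transient error, then succeeds.
calls = {"n": 0}


def flaky_extract():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("transient network error")
    return "ok"


events = []
result = run_with_retries(
    flaky_extract,
    retries=2,
    on_retry=lambda attempt, exc: events.append(("retry", attempt)),
    on_failure=lambda attempt, exc: events.append(("failed", attempt)),
)
print(result, events)  # ok [('retry', 1), ('retry', 2)]
```

Because the transient error clears on the third attempt, the failure callback never fires and nobody has to intervene by hand.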

- Testability and Local Development

Testing pipelines before deployment is crucial for reliability, and Airflow supports this with ease.

- Unit and Integration Testing: Write tests for individual tasks and entire DAGs to validate functionality and performance.

- Local Debugging: Execute tasks locally to debug and ensure they work as expected before deploying to production environments.
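One practical way to make tasks testable is to keep the business logic in plain Python functions and keep the Airflow task as a thin wrapper around them. The sketch below uses a hypothetical `normalize_orders` transform that can be tested with pytest, or run directly, without a scheduler, metadata database, or Airflow installation. For exercising a whole DAG locally, Airflow 2.5 and later also provide `dag.test()`, which runs all of a DAG's tasks in a single process.

```python
def normalize_orders(rows):
    """Hypothetical transform step: drop rows without an order_id, keep the
    last row seen for each order_id, and upper-case currency codes (default USD)."""
    by_id = {}
    for row in rows:
        if not row.get("order_id"):
            continue  # skip malformed records rather than failing the whole batch
        by_id[row["order_id"]] = {**row, "currency": (row.get("currency") or "usd").upper()}
    return sorted(by_id.values(), key=lambda r: r["order_id"])


def test_normalize_orders():
    rows = [
        {"order_id": "A2", "amount": 5, "currency": "eur"},
        {"order_id": None, "amount": 1},
        {"order_id": "A1", "amount": 3},
        {"order_id": "A2", "amount": 7, "currency": "eur"},
    ]
    result = normalize_orders(rows)
    assert [r["order_id"] for r in result] == ["A1", "A2"]
    assert result[1]["amount"] == 7        # the later duplicate wins
    assert result[0]["currency"] == "USD"  # default applied and upper-cased
    assert result[1]["currency"] == "EUR"


if __name__ == "__main__":
    test_normalize_orders()  # pytest would discover and run this test on its own
    print("all checks passed")
```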

- Community Support and Continuous Improvement

Benefit from a vibrant community that contributes to Airflow’s reliability and feature enhancements.

- Regular Updates: Stay up-to-date with the latest features and security patches.

- Community Contributions: Utilize community-developed plugins and best practices to improve pipeline reliability.

Apache Airflow’s flexibility and power make it an indispensable tool for any team aiming to enhance the reliability of their data pipelines. By facilitating collaboration and providing robust features, Airflow helps organizations unlock the full potential of their data assets.

Best Practices for Achieving High Availability and Resilience in Apache Airflow Deployments

Adopting the following Airflow best practices can help safeguard your workflows and keep your data moving smoothly.

- Implement Redundant Scheduler and Webserver Instances

- High Availability Schedulers: Run multiple scheduler instances to prevent a single point of failure. Airflow 2.0 and above support active-active schedulers, allowing workload sharing and improved fault tolerance.

- Redundant Webservers: Deploy multiple webserver instances behind a load balancer to ensure continuous access to the Airflow UI, even if one instance fails.
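With several active schedulers, the key requirement is that two schedulers never pick up the same task. Airflow relies on row-level locks in the metadata database for this (`SELECT ... FOR UPDATE`, using `SKIP LOCKED` where the database supports it), which is why multi-scheduler setups need a database such as PostgreSQL or MySQL 8+. The single-process sketch below uses SQLite only to illustrate the same guarantee as a compare-and-set update; the `task_instance` table here is a simplified, hypothetical stand-in, not Airflow's schema.

```python
import sqlite3

# Simplified, hypothetical stand-in for a scheduler's task table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE task_instance (id TEXT PRIMARY KEY, state TEXT, claimed_by TEXT)")
conn.executemany(
    "INSERT INTO task_instance (id, state, claimed_by) VALUES (?, 'scheduled', NULL)",
    [("extract",), ("transform",), ("load",)],
)
conn.commit()


def try_claim(conn, task_id, scheduler_name):
    """Atomically move one task from 'scheduled' to 'queued' for a scheduler.

    The WHERE clause acts as a compare-and-set: if another scheduler has
    already claimed the row, no row matches and the call returns False.
    """
    with conn:  # commits on success, rolls back if the statement fails
        cur = conn.execute(
            "UPDATE task_instance SET state = 'queued', claimed_by = ? "
            "WHERE id = ? AND state = 'scheduled'",
            (scheduler_name, task_id),
        )
    return cur.rowcount == 1


print(try_claim(conn, "extract", "scheduler-a"))  # True: scheduler-a wins the row
print(try_claim(conn, "extract", "scheduler-b"))  # False: already queued, no double run
```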

- Use a Reliable Metadata Database

- Database Replication: Employ robust databases like PostgreSQL or MySQL with replication and failover capabilities to store Airflow’s metadata securely.

- Leverage Distributed Executors

- Celery Executor: Utilize the Celery Executor with a message broker like RabbitMQ or Redis to distribute tasks across multiple worker nodes, enhancing scalability and resilience.

- Kubernetes Executor: Deploy Airflow on Kubernetes to take advantage of container orchestration, auto-scaling, and self-healing features.

- Design Idempotent and Fault-Tolerant DAGs

- Idempotent Tasks: Ensure tasks can run multiple times without adverse effects, which is crucial for retries and backfills.

- Clear Dependency Management: Define explicit dependencies to prevent cascading failures and ensure tasks execute in the correct order.

- Resource Optimization: Set appropriate resource limits and requests for tasks to prevent overloading workers.
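A common way to make a load task idempotent is to overwrite the whole partition for the run's logical date instead of appending to it. The sketch below uses SQLite and a hypothetical `daily_sales` table; in an Airflow task, the date would typically come from the run context (for example the `ds` template variable).

```python
import sqlite3


def load_partition(conn, logical_date, rows):
    """Idempotently load one day's data: delete the partition for logical_date,
    then insert the fresh rows, all inside a single transaction.
    Re-running for the same date (a retry or a backfill) gives the same result."""
    with conn:  # one transaction: either both statements apply or neither does
        conn.execute("DELETE FROM daily_sales WHERE ds = ?", (logical_date,))
        conn.executemany(
            "INSERT INTO daily_sales (ds, store, revenue) VALUES (?, ?, ?)",
            [(logical_date, store, revenue) for store, revenue in rows],
        )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE daily_sales (ds TEXT, store TEXT, revenue REAL)")

rows = [("north", 120.0), ("south", 80.5)]
load_partition(conn, "2024-05-01", rows)
load_partition(conn, "2024-05-01", rows)  # simulated retry: no duplicate rows
print(conn.execute("SELECT COUNT(*) FROM daily_sales").fetchone()[0])  # 2
```

A plain `INSERT` without the preceding `DELETE` would leave four rows after the retry, silently double-counting revenue downstream.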

- Configure Robust Retry Policies

- Automatic Retries: Define retries for tasks with appropriate delays to handle transient failures without manual intervention.

- Exponential Backoff: Implement exponential backoff strategies to avoid overwhelming systems during outages.
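In Airflow, exponential backoff is switched on per task with `retry_exponential_backoff=True`, usually together with `retry_delay` and a `max_retry_delay` cap. Airflow's internal calculation differs in detail (it also spreads retries out with a hash-based offset), so the plain-Python sketch below is not its exact formula. It only shows the general shape of a capped, doubling delay with optional random jitter, using a hypothetical `backoff_delays` helper:

```python
import random
from datetime import timedelta


def backoff_delays(retry_delay, retries, max_retry_delay, jitter=True, rng=random):
    """Wait before retry n is retry_delay * 2**(n - 1), capped at max_retry_delay.

    With jitter, each wait is scaled by a random factor in [0.5, 1.0] so that
    many tasks failing at once do not all retry at the same moment.
    """
    delays = []
    for n in range(1, retries + 1):
        delay = min(retry_delay * 2 ** (n - 1), max_retry_delay)
        if jitter:
            delay = delay * rng.uniform(0.5, 1.0)
        delays.append(delay)
    return delays


# Hypothetical settings: 30 s initial delay, 6 retries, 10 minute cap, no jitter.
delays = backoff_delays(timedelta(seconds=30), retries=6,
                        max_retry_delay=timedelta(minutes=10), jitter=False)
print([int(d.total_seconds()) for d in delays])  # [30, 60, 120, 240, 480, 600]
```

The cap matters: without `max_retry_delay`, the sixth retry in this example would wait 16 minutes instead of 10, and later retries would keep doubling.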

- Implement Alerting

- Set Up Alerts: Configure alerts for critical events like task failures, delayed DAG runs, or resource exhaustion to enable prompt response.
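A failure alert is most useful when it says which task failed, on which run, and where to find the logs. Airflow passes a context dictionary to callbacks such as `on_failure_callback`; the sketch below reads a few standard entries from it (`task_instance`, `run_id`, `exception`) and formats a message. `build_failure_alert` is a hypothetical helper, and actually delivering the message (email, Slack, paging) is left to whichever notifier your team uses. The usage lines fake the context with `SimpleNamespace` so the snippet runs without Airflow.

```python
from types import SimpleNamespace


def build_failure_alert(context):
    """Hypothetical helper: summarize a failed task from an Airflow-style callback context."""
    ti = context["task_instance"]
    return (
        f"ALERT: {ti.dag_id}.{ti.task_id} failed on try {ti.try_number} "
        f"(run {context['run_id']}): {context.get('exception')}\n"
        f"Logs: {ti.log_url}"
    )


# In a DAG you might wrap it as, for example:
#   def notify_on_failure(context):
#       send_to_team_channel(build_failure_alert(context))  # send_to_team_channel is hypothetical
#   ... on_failure_callback=notify_on_failure ...
# Below, the context is faked so the snippet runs without Airflow.
fake_ti = SimpleNamespace(
    dag_id="daily_sales", task_id="load", try_number=3,
    log_url="https://airflow.example.com/logs/daily_sales/load",  # placeholder URL
)
print(build_failure_alert({
    "task_instance": fake_ti,
    "run_id": "scheduled__2024-05-01T00:00:00+00:00",
    "exception": TimeoutError("warehouse query timed out"),
}))
```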

- Perform Thorough Testing

- Unit and Integration Tests: Write tests for your DAGs and tasks to catch issues early in the development cycle.

- Staging Environment: Use a staging environment that mirrors production to test changes before deployment.

- Stay Updated with the Latest Releases

- Apply Patches Promptly: Keep Airflow and its dependencies up to date to benefit from the latest features, performance improvements, and security patches.

- Monitor Release Notes: Stay informed about deprecations or breaking changes in new versions.

- Document Processes and Configurations

- Maintain Up-to-Date Documentation: Keep records of your Airflow setup, configurations, and operational procedures to facilitate knowledge transfer and troubleshooting.

- Onboarding Guides: Develop onboarding materials for new team members to ensure consistency in how Airflow is used and maintained.

- Engage with the Community

- Participate in Forums: Engage with the Apache Airflow community through mailing lists, forums, or Slack channels to stay informed about best practices and common issues.

- Contribute: Consider contributing to the project or sharing your own best practices to help improve the tool for everyone.

By implementing these best practices, data teams can significantly enhance the availability and resilience of their Airflow deployments. A robust and fault-tolerant Airflow environment ensures that data pipelines remain reliable, allowing your organization to make timely, data-driven decisions without interruption.

Enhancing Airflow Resilience and Uptime with Astro Managed Service

While Apache Airflow stands as a robust platform for orchestrating complex data pipelines, managing and scaling it to achieve high availability and resilience can be both resource-intensive and complex. This is where Astro, the managed service by Astronomer, extends the capabilities of Apache Airflow to offer a more streamlined, reliable, and efficient solution. Astro builds upon the strengths of Apache Airflow, providing additional features that simplify deployment, enhance reliability, and reduce the operational burden on data teams.

Astro offers a fully managed infrastructure that significantly simplifies deployment:

- Instead of spending valuable time setting up and maintaining Airflow environments manually, data teams can deploy optimized Airflow instances with just a few clicks. This not only accelerates the time to value but also ensures that the infrastructure is optimized for performance and reliability from the outset.

- The Astro service automatically scales resources up or down based on workload demands, ensuring consistent performance without the need for manual intervention.

- Astro’s underlying infrastructure is designed with redundancy in mind, minimizing downtime and protecting against hardware or network failures.

Data observability is an important technique that gives businesses insight into the health of their data pipelines. Astronomer unifies orchestration and observability, enhances data quality, and makes your operations more transparent, reliable, and efficient.

Astro Observe delivers pipeline-level data observability, providing a comprehensive view of your Airflow environment and tracking the health and performance of data products to ensure they meet business goals. The SLA dashboard lets teams set data freshness and delivery thresholds, and provides real-time alerts when issues arise. With full lineage tracking, teams can quickly pinpoint activity and ownership across even the most complex workflows, ensuring swift remediation when needed.
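A data freshness threshold boils down to comparing each dataset's last successful update with the maximum age the business will accept. The plain-Python sketch below illustrates that check with hypothetical dataset names; it is not Astro Observe's implementation or API.

```python
from datetime import datetime, timedelta, timezone


def freshness_breaches(last_updated, thresholds, now=None):
    """Return {dataset: age} for datasets older than their allowed maximum age.

    last_updated maps dataset name -> timezone-aware datetime of the last successful load;
    thresholds maps dataset name -> maximum allowed age (timedelta).
    A dataset that has never been loaded is reported with age None.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    breaches = {}
    for name, max_age in thresholds.items():
        updated = last_updated.get(name)
        if updated is None:
            breaches[name] = None
        elif now - updated > max_age:
            breaches[name] = now - updated
    return breaches


# Hypothetical datasets and thresholds.
now = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
last = {"orders": now - timedelta(minutes=20), "inventory": now - timedelta(hours=7)}
rules = {"orders": timedelta(hours=1), "inventory": timedelta(hours=6),
         "returns": timedelta(days=1)}
print(freshness_breaches(last, rules, now=now))
```

Here `inventory` breaches its six-hour limit, and `returns`, which has never been loaded, is reported with `None` so that missing data is not mistaken for fresh data.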

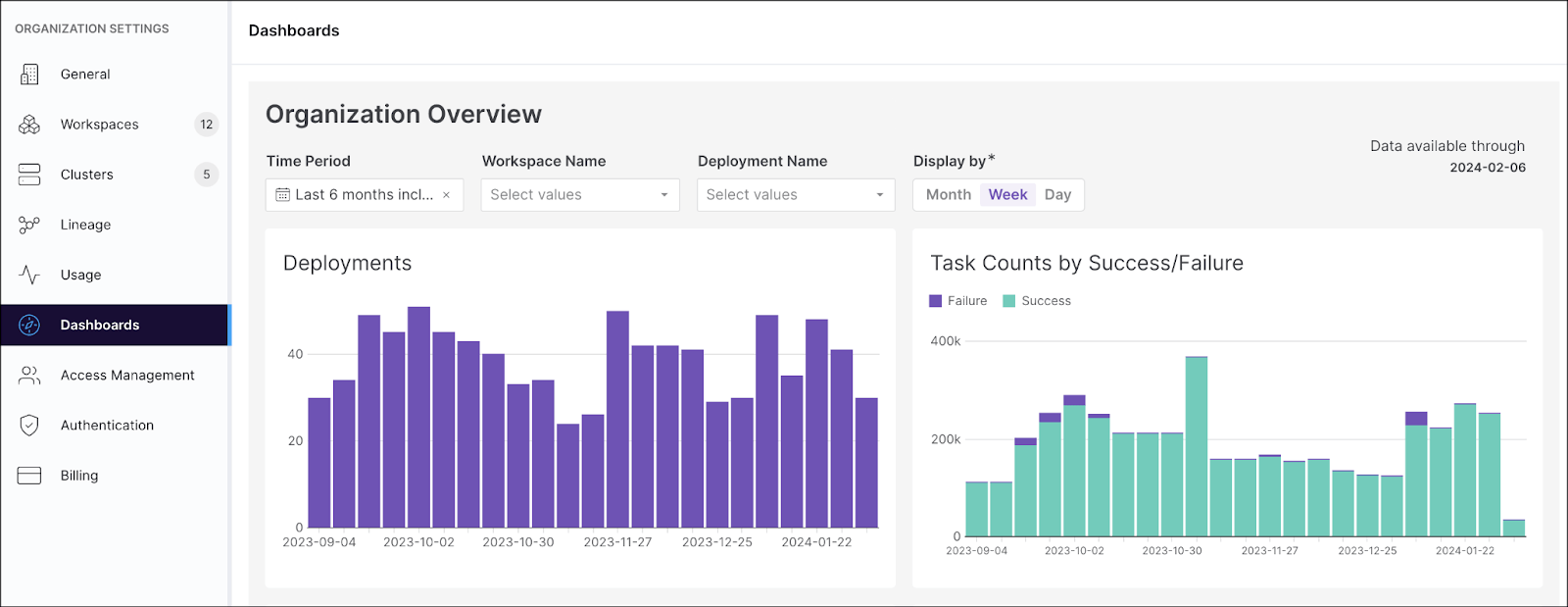

Monitoring and alerting are critical components for maintaining the health of data pipelines. Astro enhances these capabilities by offeringintegrated monitoring dashboards that provide real-time insights into the health and performance of Airflow environments.

Figure 1: Astro dashboards provide at-a-glance summaries about activity across Airflow deployments in your organization.

Data teams can configure custom alerts for critical events, such as task failures or resource constraints, enabling proactive issue resolution. Additionally, Astro provides centralized logging, which simplifies troubleshooting and auditing processes by consolidating logs for all workflows.

Another significant advantage of Astro is its seamless handling of upgrades and patching. The service manages Airflow version upgrades and security patches, ensuring that environments remain up-to-date without disrupting ongoing workflows.

Astro also bolsters disaster recovery and backup processes. It performs automated backups of metadata and configurations, safeguarding against data loss. In the event of regional outages, Astro can fail over workloads to other regions, enhancing resilience and ensuring business continuity.

Security and compliance are essential to reliability, and Astro addresses these concerns with enterprise-grade security features. It provides advanced security measures such as single sign-on (SSO), role-based access control (RBAC), and encryption of data both in transit and at rest. For organizations handling sensitive data, Astro’s compliance with industry standards like SOC 2, GDPR, and HIPAA offers an added layer of assurance.

Expert Airflow support and professional services are integral parts of Astro’s offering. Data teams have access to 24/7 support from Airflow experts who can assist with troubleshooting, best practices, and performance tuning. The service also provides onboarding assistance and training resources to help teams get up to speed quickly.

Importantly, Astro stays aligned with open-source Apache Airflow. It is fully compatible with Apache Airflow, ensuring that workflows are portable and vendor-neutral. Astronomer is the largest contributor to the Apache Airflow project, helping to drive its evolution and stability, which benefits the broader community.

Why Choose Astro over Self-Managed Airflow?

Choosing Astro over a self-managed Apache Airflow setup offers numerous advantages. It reduces operational overhead by offloading infrastructure management tasks, allowing data teams to focus on developing and optimizing data pipelines rather than handling maintenance and scaling issues. This shift not only accelerates project timelines but also improves pipeline reliability and uptime, thanks to Astro’s high-availability architecture and proactive monitoring.

Cost efficiency is another benefit, as resource utilization is optimized with automatic scaling, potentially lowering operational costs compared to over-provisioned self-managed clusters. Scalability is seamless, enabling organizations to handle growing workloads and data volumes without worrying about underlying infrastructure limitations.

Astro enhances various use cases, from orchestrating complex data pipelines that require high reliability to managing machine learning operations (MLOps) where models need to be trained and deployed consistently and reliably. It also supports multi-team collaboration, allowing multiple teams to work on different projects within the same platform, each with their own isolated environments.

For organizations seeking to enhance the resilience and high availability of their data pipelines, Astro offers a compelling solution. By extending the capabilities of Apache Airflow with managed infrastructure, advanced features, and expert support, Astro enables data teams to deliver reliable and scalable data workflows with less effort and greater confidence.

Customers Deploying Highly Available Data Pipelines on Airflow and Astronomer

Here are just a few examples of data teams orchestrating mission-critical data workflows and pipelines:

- Ford Motor Company’s Advanced Driver Assistance Systems team processes over 1 petabyte of lidar, radar, video, and geospatial data weekly and manages 300+ concurrent workflows. With Airflow and Astro, the team has significantly reduced workflow errors while improving reliability and scalability to accelerate AI model development. Learn more from the Astronomer Data Flowcast.

- Northern Trust has $1.5 trillion of assets under management and has provided 135 years of service to its clients. Data and workflow orchestration are critical for the internal financial warehouse, which holds vital data for balance sheets, income statements, and other financial reports. This data must be accurate and timely, underscoring the importance of reliable orchestration tools. That’s why the institution turned to Airflow and Astronomer as it modernized its systems.

- Autodesk, a global software leader, sought to enhance its position by adopting DataOps methodologies and integrating ML into business processes. However, legacy scheduling software hindered these initiatives due to its lack of support for modern cloud services and ML frameworks, as well as reliability issues. Autodesk’s platform team turned to Airflow, powered by Astronomer, for a fully managed service to orchestrate data pipelines across cloud-native and on-premises infrastructure. With Astronomer consultants, over 500 critical data pipelines were migrated to Airflow in just 12 weeks. This shift has allowed engineers to focus on developing new data products and services rather than fixing workflow failures. Read more in the case study.

Getting Started with Airflow

Astro is a fully managed modern data orchestration platform powered by Apache Airflow. Astro augments Airflow with enterprise-grade features to enhance developer productivity, optimize operational efficiency at scale, meet production environment requirements, and more.

Astro enables companies to place Airflow at the core of their data operations, ensuring the reliable delivery of mission-critical data pipelines and products across every use case and industry.

Check out our interactive demo to learn more about Astro.

Frequently Asked Questions about Apache Airflow Resilience

Why is high availability so important for Airflow?

High availability is crucial for Airflow to ensure that data pipelines run smoothly and reliably. Downtime in Airflow can lead to data integrity issues, delayed insights, increased manual intervention, and resource contention. From a business perspective, failures can result in lost revenue, reduced customer satisfaction, compliance risks, and reputational damage. Ensuring high availability minimizes these risks and maintains operational efficiency.

What are the key features provided by Airflow that minimize downtime?

Airflow offers several key features that minimize downtime:

Scheduling and Retries: Advanced scheduling capabilities and built-in retry mechanisms ensure that tasks run on time and transient issues do not disrupt the entire workflow.

Monitoring and Alerts: Comprehensive monitoring features and integration with alerting systems enable real-time tracking and prompt issue resolution.

Fault Tolerance: Airflow's DAG structure and error handling logic allow workflows to recover from transient failures without manual intervention.

Scalability: Airflow's distributed execution and support for various executors allow it to handle increasing volumes of data and complex workflows without compromising performance.

What are some of the best practices for implementing Airflow high availability?

Implementing high availability in Airflow involves several best practices:

Redundant Scheduler and Webserver Instances: Run multiple scheduler and webserver instances to prevent single points of failure.

Reliable Metadata Database: Use robust databases with replication and failover capabilities to store Airflow's metadata securely.

Distributed Executors: Utilize executors like CeleryExecutor or KubernetesExecutor to distribute tasks across multiple worker nodes.

Idempotent and Fault-Tolerant DAGs: Design DAGs with idempotent tasks and clear dependency management to prevent cascading failures.

Robust Retry Policies: Define automatic retries with appropriate delays and exponential backoff strategies.

Alerting, Monitoring, and Testing: Configure alerts for critical events and test changes thoroughly in staging environments.

How does the Astro managed service for Airflow improve uptime and resilience?

The Astro managed service by Astronomer enhances Airflow's uptime and resilience by providing:

Simplified Deployment: Automated deployment and scaling of optimized Airflow instances.

Data Observability: Comprehensive insights into the health and performance of data pipelines.

Integrated Monitoring and Alerting: Real-time dashboards and custom alerts for proactive issue resolution.

Seamless Upgrades and Patching: Automated upgrades and security patches without disrupting ongoing workflows.

Disaster Recovery and Backup: Automated backups and failover processes to safeguard against data loss.

Enterprise-Grade Security: Advanced security measures and compliance with industry standards.

Expert Support: 24/7 support from Airflow experts for troubleshooting and best practices.

What is the best way to get started with Airflow?

Astro is the best way to get started with Airflow. You can try Astro for free.