Simplify Your ETL and ELT Processes with Astronomer

Transform your data integration workflows with Astronomer, a platform built on Apache Airflow for managing ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) pipelines. Unlock the full potential of your data and drive insightful business decisions with ease.

What is ETL/ELT?

ETL (Extract, Transform, Load) is a data integration process where data is extracted from various sources, transformed into a desired format or structure, and then loaded into a centralized repository.

ELT (Extract, Load, Transform) is a data integration process where raw data is extracted from various sources, loaded into a centralized storage system, and then transformed within that system.

Key Features of ETL and ELT on Astronomer

Powerful Data Extraction Capabilities

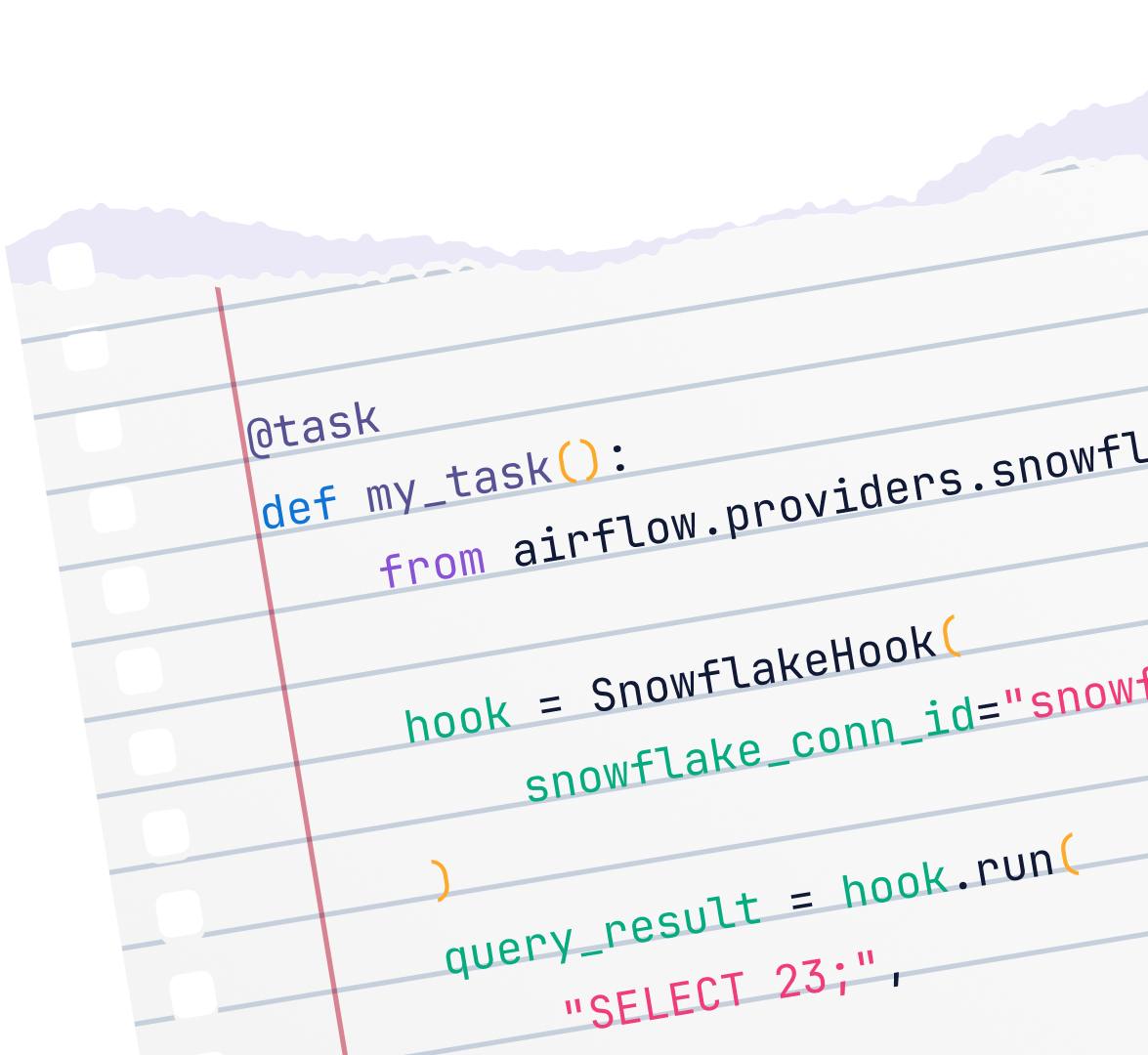

Astro leverages an extensive library of pre-built operators and hooks to extract data from a wide array of sources, making the ETL process seamless and efficient. Whether your data lives in relational databases, NoSQL databases, cloud storage services, APIs, or flat files, Astro connects to it wherever it resides.

Flexible Data Transformation

Astro provides unparalleled flexibility in defining transformation tasks within your Airflow DAGs (Directed Acyclic Graphs). Supporting both ETL and ELT methodologies, Astro allows you to choose the approach that best fits your data strategy.
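
To make the DAG idea concrete, here is a minimal plain-Python sketch of how a dependency graph determines task order in each approach. It uses the standard-library `graphlib` module rather than Airflow itself, and the task names and the `execution_order` helper are hypothetical, chosen only for illustration.

```python
from graphlib import TopologicalSorter

# Each key is a task; its value is the set of tasks that must finish first.
# Task names are hypothetical and used only for illustration.
ETL_DAG = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
}

ELT_DAG = {
    "extract": set(),
    "load": {"extract"},
    "transform": {"load"},
}


def execution_order(dag: dict[str, set[str]]) -> list[str]:
    """Return one valid run order for the tasks in a dependency graph."""
    return list(TopologicalSorter(dag).static_order())


etl_order = execution_order(ETL_DAG)
elt_order = execution_order(ELT_DAG)
print(etl_order)  # ['extract', 'transform', 'load']
print(elt_order)  # ['extract', 'load', 'transform']
```

The tasks are the same in both graphs; only the dependencies differ, which is why a DAG-based orchestrator can express either methodology.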

Efficient Data Loading

Astro enhances the data loading phase of your ETL and ELT pipelines by offering reliable and efficient mechanisms to load transformed data into your target systems. This ensures the ETL or ELT cycle is completed effectively and accurately.

Comprehensive Orchestration and Monitoring

Astro provides an enterprise-grade orchestration platform with advanced monitoring features, enhancing your ability to manage your ETL and ELT pipelines effectively. By extending Airflow's capabilities, Astro offers a robust and user-friendly experience for orchestrating complex data pipelines.

Scalability, Security, and Compliance

Astro ensures your ETL and ELT processes are scalable, secure, and compliant with industry standards, so they can serve as a dependable part of your organization's data operations.

Why Choose Astronomer for ETL and ELT

| Feature | Astronomer | Traditional ETL/ELT Tools |
|---|---|---|
| Unified Orchestration Platform: Centralized environment orchestrating all ETL and ELT stages within a single platform. |  |  |
| Python-based Customization: Fully customizable workflows with Python DAGs, custom operators, and flexible configurations. |  |  |
| Kubernetes-based Scalability: Dynamically scalable with Kubernetes; efficient for a wide range of workloads. |  |  |
| Extensive Library of Pre-Built Integrations: Seamless integration with a wide range of modules and provider packages for popular third-party tools. |  |  |
| Open-source Extensibility: Built on open-source Apache Airflow, enabling community-driven enhancements and integrations. |  |  |
| Advanced dbt Integration: Run and observe dbt projects alongside your Airflow workflows, simplifying orchestration, deployment, and scaling of data transformations on a fully managed platform. |  |  |
| Task-Optimized Worker Queues: Assign tasks to specific worker sets based on computational needs for optimized resource utilization. |  |  |
| Centralized Observability: Comprehensive pipeline-level data observability with SLA dashboards, real-time alerts, and full lineage tracking. |  |  |
| Dynamic Resource Allocation: Efficient resource management ensures high performance and quick adaptation to workload changes. |  |  |
| Enhanced Data Quality Assurance: Implement comprehensive data validation and quality checks within your transformation workflows. |  |  |
| Cost Efficiency: Lower costs by consolidating multiple tools and leveraging an open-source foundation. |  |  |
| Security & Compliance: Built-in security features like encryption, RBAC, and SSO. |  |  |

Resources to Accelerate Your ETL and ELT Journey

Get full access to our library of ebooks, case studies, webinars, blog posts, and more.

FAQs

What is Astronomer and how does it simplify ETL and ELT processes?

Astronomer is a data orchestration platform built on Apache Airflow that simplifies ETL and ELT by automating and streamlining workflows across multiple sources and destinations. With built-in integrations and features like task management and optimized compute, Astronomer reduces complexity and enhances efficiency for data teams.

How does Astronomer help manage ETL and ELT pipelines?

Astronomer not only automates and orchestrates ETL and ELT pipelines but also integrates with multiple data sources, handles transformations, scales dynamically, and provides comprehensive monitoring and error handling to ensure smooth pipeline execution.

What are the key differences between ETL and ELT, and when should I use each?

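In ETL, data is transformed before it is loaded into the target system; in ELT, raw data is loaded first and transformed inside the target system. ETL is better suited for processing data before storage, while ELT is ideal for leveraging modern data warehouses to handle large datasets post-loading.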

How does Astronomer compare to traditional ETL and ELT tools?

Astronomer consolidates ETL and ELT processes into one system, reducing the need for multiple tools, lowering costs, and offering more flexibility.

What are the benefits of using Astronomer for managing ETL and ELT workflows?

Astronomer simplifies workflows, offers dynamic scaling, improves efficiency, and reduces costs, all while providing real-time monitoring and security.

What are the stages of ETL?

- Extract
  - Definition: Collecting raw data from various sources such as databases, applications, files, or APIs.
  - Process: Data is gathered from one or more source systems, regardless of its raw format.
- Transform
  - Definition: Converting the extracted data into a suitable format or structure for analysis.
  - Process Includes:
    - Data Cleaning: Removing errors, duplicates, and inconsistencies.
    - Data Standardization: Converting data into a common format.
    - Data Enrichment: Enhancing data by adding relevant information.
    - Applying Business Rules: Implementing specific calculations or logic as per organizational needs.
- Load
  - Definition: Importing the transformed data into a target system like a data warehouse or data lake.
  - Process: Data is loaded in a way that optimizes performance and ensures accessibility.
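
The three ETL stages above can be sketched in a few lines of plain Python. This is an illustrative example under stated assumptions, not Astronomer or Airflow code: the sample CSV data, the `orders` table, and the `extract`, `transform`, and `load` helpers are all hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real pipeline would read from a database, API, or file.
RAW_CSV = """order_id,customer,amount
1, alice ,10.50
2,BOB,20.00
2,BOB,20.00
3,carol,not_a_number
"""


def extract(source: str) -> list[dict[str, str]]:
    """Extract: read raw rows from the source without changing them."""
    return list(csv.DictReader(io.StringIO(source)))


def transform(rows: list[dict[str, str]]) -> list[tuple[int, str, float]]:
    """Transform: clean, deduplicate, and standardize rows before loading."""
    seen: set[int] = set()
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])  # cleaning: skip unparseable amounts
        except ValueError:
            continue
        order_id = int(row["order_id"])
        if order_id in seen:  # cleaning: skip duplicate orders
            continue
        seen.add(order_id)
        customer = row["customer"].strip().title()  # standardization
        cleaned.append((order_id, customer, amount))
    return cleaned


def load(conn: sqlite3.Connection, rows: list[tuple[int, str, float]]) -> None:
    """Load: write the already-transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(conn, transform(extract(RAW_CSV)))
etl_rows = conn.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
print(etl_rows)  # [(1, 'Alice', 10.5), (2, 'Bob', 20.0)]
```

Only clean rows ever reach the target system here, which is the defining trait of ETL: the transformation happens in the pipeline, before the load.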

What are the stages of ELT?

- Extract
  - Definition: Collecting raw data from various sources such as databases, applications, files, or APIs.
  - Process: Data is gathered regardless of format or structure to ensure a comprehensive dataset.
- Load
  - Definition: After extraction, raw data is immediately loaded into the target system, such as a data warehouse or data lake.
  - Process: Data is ingested in its native format.
- Transform
  - Definition: Transformations occur within the target system after the data is loaded.
  - Process Includes:
    - Data Cleaning: Removing errors, duplicates, and inconsistencies.
    - Data Standardization: Converting data into a common format.
    - Data Enrichment: Enhancing data by adding relevant information.
    - Applying Business Rules: Implementing specific calculations or logic as per organizational needs.
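
For contrast, here is a minimal ELT sketch in plain Python, again with an in-memory SQLite database standing in for the data warehouse. The sample data and the `raw_orders` and `orders` table names are hypothetical. Raw rows are landed untouched as text, and the cleaning and standardization then run as SQL inside the target system.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real pipeline would read from a database, API, or file.
RAW_CSV = """order_id,customer,amount
1, alice ,10.50
2,BOB,20.00
2,BOB,20.00
3,carol,not_a_number
"""

conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse

# Extract and Load: land every raw row, unmodified, in a staging table.
raw_rows = list(csv.DictReader(io.StringIO(RAW_CSV)))
conn.execute("CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (:order_id, :customer, :amount)", raw_rows
)

# Transform: runs as SQL inside the target system, after the load.
conn.execute(
    """
    CREATE TABLE orders AS
    SELECT DISTINCT                                   -- cleaning: drop duplicates
        CAST(order_id AS INTEGER) AS order_id,
        UPPER(SUBSTR(TRIM(customer), 1, 1))
            || LOWER(SUBSTR(TRIM(customer), 2)) AS customer,  -- standardization
        CAST(amount AS REAL) AS amount
    FROM raw_orders
    WHERE amount GLOB '[0-9]*'                        -- cleaning: crude numeric check
    """
)
conn.commit()

raw_count = conn.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
elt_rows = conn.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
print(raw_count)  # 4
print(elt_rows)   # [(1, 'Alice', 10.5), (2, 'Bob', 20.0)]
```

Because the raw table is kept alongside the transformed one, the transformation can be revised and rerun later without extracting the data again, which is one practical reason teams choose ELT.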