Astronomer + Databricks

Build, Run, & Observe AI-Ready Pipelines with Astro.

The unified DataOps platform powered by Apache Airflow® for orchestrating end-to-end AI/ML workflows while ensuring data quality and optimizing costs.

Simplify and scale your Databricks pipelines with Astro

Build & run AI pipelines

Build Modern AI/ML Workflows on Databricks.

Orchestrate scalable ML pipelines on Databricks with GPU-enabled compute and MLflow integration, reducing deployment times while delivering production-grade models.

Monitor end-to-end pipelines

Gain complete visibility across your data ecosystem.

Track your entire data pipeline from source to Databricks to downstream applications in a single, unified interface—so your team can quickly troubleshoot issues, reduce MTTR by 60%, and confidently deliver reliable data to stakeholders.

Optimize Databricks costs

Control your Databricks spend with Astro Observe.

Track Databricks Unit (DBU) consumption by cluster, task, or team to identify optimization opportunities and lower your Databricks bill.

Ensure mission-critical reliability

Deploy intricate Databricks workflows with confidence.

Cut infrastructure management time by 75% with Astro’s enterprise-grade reliability and high availability. Free your team to create business value while ensuring consistent data delivery.

Take a 30-second tour of Astro

See how easy it is to build ETL/ELT pipelines with Astronomer and Databricks

Organizations from every industry transform their data operations with Astro & Databricks.

Use Cases

Tackle your toughest data problems with Astro.

Orchestration

Orchestrate end-to-end ML workflows across Databricks from feature engineering to model deployment and monitoring.

ETL/ELT

Build scalable data pipelines that transform data using Databricks' Spark processing and make it available for analytics.

Operational Analytics

Orchestrate data flows through Databricks processing to dashboards for timely, actionable business insights.

Infrastructure Operations

Schedule and configure Databricks infrastructure operations through Airflow DAGs for optimal performance and cost efficiency.

Frequently Asked Questions

What advantages does Airflow have over Databricks Workflows?

Airflow provides cross-system orchestration beyond Databricks, with complex DAG structures and conditional logic not available in Databricks Workflows. With 1,900+ pre-built modules, you can orchestrate Databricks alongside S3, Snowflake, APIs, and other systems within a single pipeline. Airflow's code-based approach enables version control, testing, and CI/CD integration. Airflow is also not bound by Databricks Workflows' limits of 100 tasks per job, 2,000 concurrent tasks per workspace, and 10,000 jobs per workspace per hour. Most organizations use Airflow to orchestrate Databricks operations alongside their broader data pipeline, creating a unified control plane that extends beyond what Databricks Workflows can handle alone.

What makes Astro better than open-source Airflow for Databricks?

Astro delivers enterprise-grade reliability with 70% higher uptime and automatic scaling for varying workload demands, eliminating infrastructure management overhead. It provides integrated Databricks cost monitoring and developer productivity tools like the Astro CLI and Cloud IDE. Organizations typically see a 45% reduction in costs when moving from self-managed Airflow to Astro, according to Forrester's Total Economic Impact study.

What's the implementation timeline for Astro with Databricks?

Most organizations implement Astro with existing Databricks environments in 2-4 weeks, including initial setup, migration, and cost optimization. Many customers report completing migrations within a two-week sprint. Campspot, for example, moved from custom batch jobs to Astro and reduced processing time from hours to minutes. Astronomer's professional services and detailed documentation streamline implementation.

How does Astro help optimize Databricks costs?

Astro Observe provides unified visibility into Databricks Units with attribution to specific data products, DAGs, tasks, and teams. It includes cost anomaly detection to help teams spot unexpected spikes or unusual changes and identify optimization opportunities. The integrated dashboard displays historical trends, helping teams implement data-driven cost governance while maintaining performance for critical workloads.
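The attribution and anomaly-detection ideas above can be illustrated with a small, self-contained sketch. This is not how Astro Observe is implemented; the record fields (`day`, `team`, `dag_id`, `dbus`), the sample values, and the leave-one-out threshold rule are all assumptions chosen for illustration.

```python
from collections import defaultdict
from statistics import mean, stdev

# Hypothetical DBU usage records; field names and values are illustrative only.
USAGE = [
    {"day": "2024-01-01", "team": "analytics", "dag_id": "daily_sales", "dbus": 60},
    {"day": "2024-01-01", "team": "ml", "dag_id": "train_model", "dbus": 40},
    {"day": "2024-01-02", "team": "analytics", "dag_id": "daily_sales", "dbus": 65},
    {"day": "2024-01-02", "team": "ml", "dag_id": "train_model", "dbus": 45},
    {"day": "2024-01-03", "team": "analytics", "dag_id": "daily_sales", "dbus": 55},
    {"day": "2024-01-03", "team": "ml", "dag_id": "train_model", "dbus": 40},
    {"day": "2024-01-04", "team": "analytics", "dag_id": "daily_sales", "dbus": 60},
    {"day": "2024-01-04", "team": "ml", "dag_id": "train_model", "dbus": 45},
    {"day": "2024-01-05", "team": "analytics", "dag_id": "daily_sales", "dbus": 60},
    {"day": "2024-01-05", "team": "ml", "dag_id": "train_model", "dbus": 240},
]


def totals_by(records, field):
    """Sum DBUs grouped by one attribution field (for example team or dag_id)."""
    totals = defaultdict(float)
    for record in records:
        totals[record[field]] += record["dbus"]
    return dict(totals)


def find_spikes(daily_totals, threshold=3.0):
    """Flag days whose total exceeds the mean of the *other* days by more
    than `threshold` standard deviations (a simple leave-one-out check)."""
    spikes = []
    for day, value in daily_totals.items():
        others = [v for d, v in daily_totals.items() if d != day]
        if len(others) < 2:
            continue  # not enough history to estimate spread
        spread = stdev(others)
        if spread > 0 and (value - mean(others)) / spread > threshold:
            spikes.append(day)
    return sorted(spikes)


if __name__ == "__main__":
    print(totals_by(USAGE, "team"))
    print(find_spikes(totals_by(USAGE, "day")))
```

Comparing each day against the other days keeps a single large spike from inflating its own baseline, which matters when the history is short.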

How does Astro Observe help with Databricks pipeline monitoring?

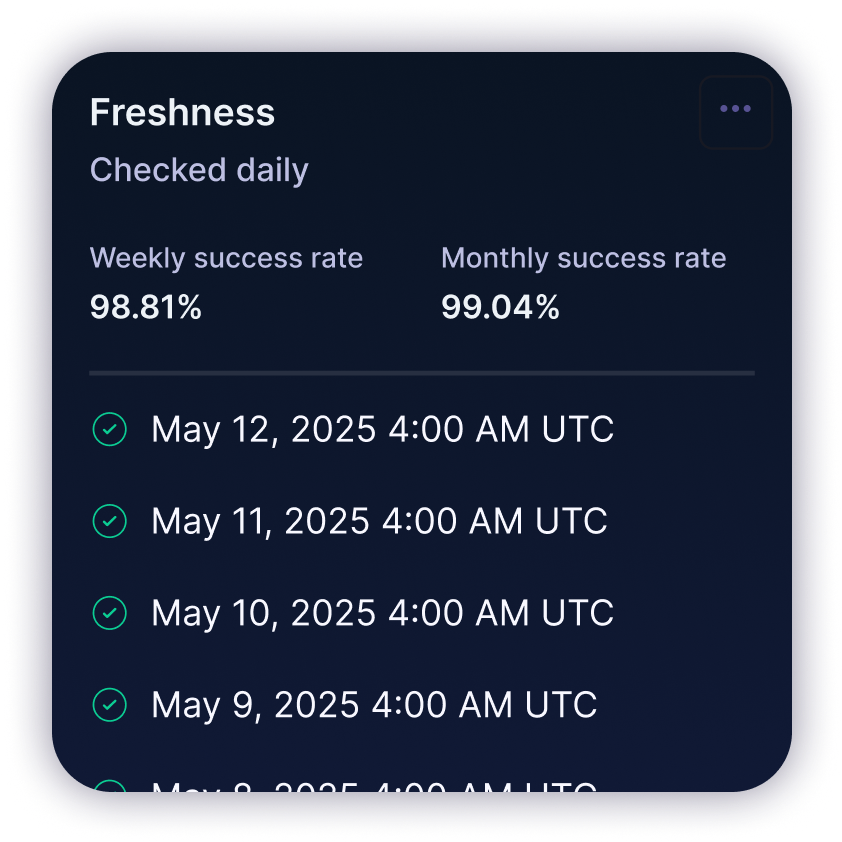

Astro Observe provides a comprehensive single pane of glass for your Airflow environment, offering visibility into the health and performance of data products to ensure they meet business objectives. It delivers pipeline-level data observability with SLA dashboards for setting data freshness and delivery thresholds, plus real-time alerts when issues arise. Full lineage tracking helps teams quickly pinpoint activity and ownership, track dependencies across the data supply chain, and ensure swift remediation even in complex workflows.
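As a rough illustration of what a data freshness threshold means, the sketch below checks hypothetical data products against per-product maximum ages. The product names, the SLA values, and the function names are assumptions made for this example and do not reflect Astro Observe's API.

```python
from datetime import datetime, timedelta, timezone


def is_stale(last_updated, max_age, now=None):
    """Return True if the last update is older than the allowed maximum age."""
    now = now or datetime.now(timezone.utc)
    return now - last_updated > max_age


def stale_products(last_updates, slas, now=None):
    """Return the sorted names of data products that breach their freshness SLA.

    Products without a configured SLA are skipped.
    """
    now = now or datetime.now(timezone.utc)
    return sorted(
        name
        for name, updated in last_updates.items()
        if name in slas and is_stale(updated, slas[name], now)
    )


if __name__ == "__main__":
    now = datetime(2024, 1, 5, 12, 0, tzinfo=timezone.utc)
    # Hypothetical freshness thresholds per data product.
    slas = {
        "sales_dashboard": timedelta(hours=1),
        "churn_features": timedelta(hours=24),
    }
    # Hypothetical timestamps of each product's last successful update.
    last_updates = {
        "sales_dashboard": now - timedelta(hours=2),
        "churn_features": now - timedelta(hours=3),
    }
    for name in stale_products(last_updates, slas, now):
        print(f"ALERT: {name} missed its freshness SLA")
```

Passing `now` explicitly keeps the check deterministic, which makes it easy to test against fixed timestamps.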

How does Astro enhance data governance for Databricks?

Astro strengthens Databricks data governance with full supply chain visibility of data products and dependencies, comprehensive lineage tracking across DAGs and deployments, and DAG versioning for AI compliance. These capabilities ensure reproducibility of ML models and proper audit trails. The integration respects Unity Catalog security controls while adding a layer of orchestration governance that helps organizations meet compliance requirements for their Databricks data operations.

How does Astro integrate with Databricks for ML workflows?

Astro integrates with Databricks' ML capabilities through specialized operators and hooks. It enables remote worker execution on Databricks GPU clusters for compute-intensive tasks while maintaining central orchestration. Asset-based workflows use asynchronous scheduling and event-driven triggers, so pipelines can start automatically when new data arrives, which shortens the ML workflow. DAG versioning provides complete model lineage for compliance and governance.

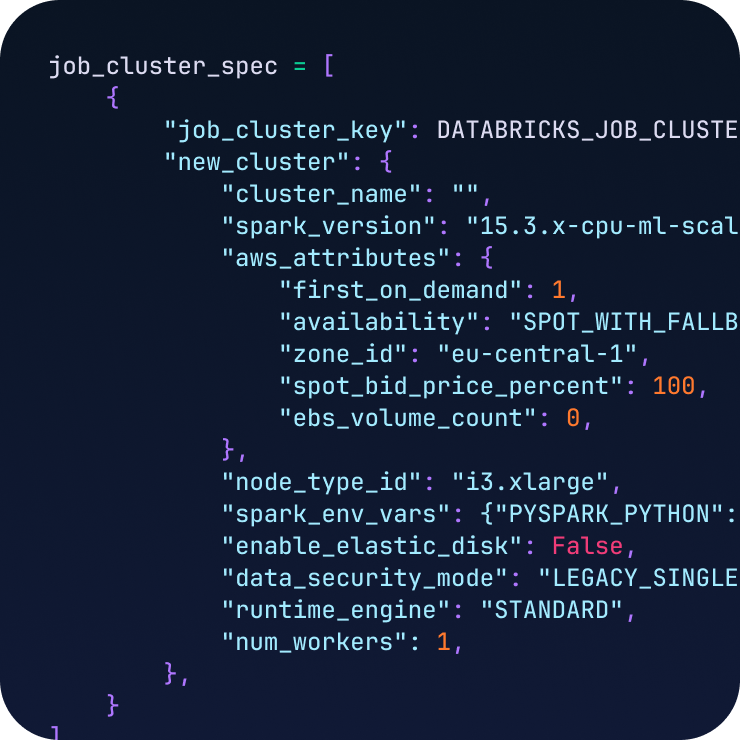

What's the difference between job clusters and all-purpose clusters for cost optimization?

Job clusters start at $0.15/DBU, compared with $0.40/DBU for interactive workloads on all-purpose clusters, a reduction of about 62% in the per-DBU rate. Airflow's DatabricksWorkflowTaskGroup automatically provisions job clusters that terminate after completion, eliminating idle costs. This delivers substantial savings for production ETL and ML workloads that don't require interactive development capabilities.
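The percentage quoted above follows directly from the two starting rates. The short sketch below reproduces the arithmetic and applies it to an assumed monthly consumption; the 10,000 DBU figure is hypothetical, and actual Databricks rates vary by cloud, region, and plan.

```python
# Starting rates quoted in the text above (USD per DBU).
JOB_RATE_PER_DBU = 0.15          # job cluster
ALL_PURPOSE_RATE_PER_DBU = 0.40  # all-purpose (interactive) cluster


def percent_reduction(old_rate: float, new_rate: float) -> float:
    """Return the percentage saved by moving from old_rate to new_rate."""
    return (old_rate - new_rate) / old_rate * 100


def dbu_cost(dbus: float, rate_per_dbu: float) -> float:
    """Return the cost of consuming `dbus` Databricks Units at a given rate."""
    return dbus * rate_per_dbu


if __name__ == "__main__":
    saving = percent_reduction(ALL_PURPOSE_RATE_PER_DBU, JOB_RATE_PER_DBU)
    print(f"Per-DBU rate reduction: {saving:.1f}%")

    monthly_dbus = 10_000  # assumed consumption, for illustration only
    print(f"All-purpose: ${dbu_cost(monthly_dbus, ALL_PURPOSE_RATE_PER_DBU):,.2f}")
    print(f"Job cluster: ${dbu_cost(monthly_dbus, JOB_RATE_PER_DBU):,.2f}")
```

The comparison covers only the DBU rate. Idle time on a long-running all-purpose cluster would add to the gap, since job clusters terminate when the run completes.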