Workflows then agents: the practical approach to enterprise AI


We’ve seen countless teams chase AI, only to get lost in its complexity without delivering value. The truth? Most organizations don’t need agents talking to agents to get immediate ROI out of generative AI – they need reliable LLM workflows that solve real business problems today. That’s why we’re open-sourcing our AI SDK for Apache Airflow, distilling battle-tested patterns that have helped companies achieve transformative results within days.


Last week, a CTO asked me to help his team build an “agent swarm” for processing customer support tickets. After digging deeper, it became clear they didn’t need autonomous agents interacting in complex choreography. They needed reliable, observable workflows leveraging LLMs for specific tasks.

The hype cycle around AI agents is reaching fever pitch, with some fearing that we’re entering the trough of disillusionment. Every vendor promises autonomous systems that can reason, plan, and act with minimal human intervention. It’s an exciting vision of the future, but one that’s causing many teams to overlook the pragmatic, immediate value of well-orchestrated LLM workflows.

The new data engineering frontier

The AI revolution isn’t just making existing jobs more efficient; it’s fundamentally expanding what’s possible. Data engineers, once confined to moving and transforming data, now find themselves at the center of a much wider range of data and operational processes. According to our State of Airflow 2025 Report, which surveyed over 5,000 data engineers (the largest data engineer survey ever conducted), 85% of organizations are now using Airflow for external and revenue-generating data products.

Previously, if you wanted to analyze unstructured data at scale—think customer support tickets, product reviews, or sales call transcripts—you needed specialized ML engineers, data scientists, and months of model development. Now, with foundation models handling the complexity of understanding language, data engineers can build pipelines that extract structured insights from unstructured sources in hours, not months.

This shift represents a massive opportunity. Every executive wants to run their business more efficiently, and data engineers are uniquely positioned to deliver this value through LLM-enhanced workflows. But the gap between hype and practical implementation remains wide.

We’re starting to see patterns in our customer base and broader community. According to a research study by RAND, just 20% of AI projects will make it to production in 2025. But in 2024, 35% of experienced Airflow users and 55% of data teams using Astro had AI in production.

For example, a fintech company running on Astro grew to $100M in ARR in two years by augmenting its go-to-market activities with LLMs, largely through LLM workflows. These range from automatically personalizing cold outreach to generating product reviews based on call transcripts. The company has stuck with simple but effective LLM workflows as it has continued scaling efficiently to a $10B company.

Go with the simplest solution

Building multi-agent systems before mastering basic LLM workflows is like implementing a full microservices pattern before even writing your first API. It’s putting architectural complexity ahead of proven patterns and business outcomes.

The allure is understandable. The demos are impressive: agents that seem to think like humans, breaking down problems, gathering information, and collaborating to reach solutions. But beneath the surface lies a web of complexity that few organizations are equipped to manage in production.

Anthropic’s engineering team highlights this challenge in their research: “When building applications with LLMs, we recommend finding the simplest solution possible, and only increasing complexity when needed. This might mean not building agentic systems at all.” Their advice is crystal clear: complexity should only be added when it demonstrably improves outcomes.

A B2B marketplace was struggling with product onboarding due to inconsistent supplier data. By implementing LLM workflows to standardize content and extract structured information, they achieved what their VP of Engineering called a miracle—onboarding “more products in the last few months than in the previous 10 years.” The key wasn’t agent autonomy but carefully orchestrated LLM tasks integrated into their existing systems.

The reality is that most business problems don’t require autonomous agent swarms, at least not yet. They require reliable, observable workflows where LLMs handle specific tasks within well-defined boundaries. These workflows leverage what LLMs do best—understanding and generating natural language—while maintaining the control and predictability that business systems demand.

LLM workflows in practice

What exactly is an LLM workflow? At its core, it’s an orchestrated process that incorporates language models as specific steps within a larger business operation. Each LLM call has clear inputs, expected outputs, and sits within a predictable execution path.

According to Anthropic’s research, the fundamental distinction between workflows and agents is their level of autonomy and predictability:

Workflows are systems where LLMs and tools are orchestrated through predefined code paths. They offer control, consistency, and observability for well-defined business processes.

Agents, in contrast, are systems where LLMs dynamically direct their own processes and tool usage, maintaining control over how they accomplish tasks. They provide flexibility but introduce less predictability.
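To make the distinction concrete, here is a minimal sketch in plain Python. It assumes a hypothetical support-ticket task. The tool functions and `fake_llm_choose_next` are stand-ins, and no real model is called. In the workflow, the code fixes the order of steps. In the agent loop, the model picks the next tool on each turn, and a `max_steps` cap keeps the loop bounded.

```python
from typing import Callable


# Hypothetical tools shared by both styles; each takes and returns a state dict.
def fetch_ticket(state: dict) -> dict:
    return {**state, "text": "My invoice is wrong"}


def classify(state: dict) -> dict:
    label = "billing" if "invoice" in state["text"].lower() else "other"
    return {**state, "label": label}


def draft_reply(state: dict) -> dict:
    return {**state, "reply": f"Routing your {state['label']} question to the right team."}


TOOLS: dict[str, Callable[[dict], dict]] = {
    "fetch_ticket": fetch_ticket,
    "classify": classify,
    "draft_reply": draft_reply,
}


def run_workflow(state: dict) -> dict:
    """Workflow: the code path is fixed in advance, so every run takes the same steps."""
    for step in (fetch_ticket, classify, draft_reply):
        state = step(state)
    return state


def fake_llm_choose_next(state: dict) -> str:
    """Stub for a model deciding its next action; a real agent would call an LLM here."""
    if "text" not in state:
        return "fetch_ticket"
    if "label" not in state:
        return "classify"
    if "reply" not in state:
        return "draft_reply"
    return "done"


def run_agent(state: dict, max_steps: int = 10) -> dict:
    """Agent: the model picks each next tool at runtime; a step cap bounds the loop."""
    for _ in range(max_steps):
        action = fake_llm_choose_next(state)
        if action == "done":
            return state
        state = TOOLS[action](state)
    raise RuntimeError("agent did not finish within max_steps")


print(run_workflow({})["reply"])
print(run_agent({})["reply"])
```

With a deterministic stub, both functions return the same result. With a real model, `run_agent` can take a different path on each run. That variability is the predictability trade-off described above.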

Consider these key differences:

| LLM Workflows | Agent Architectures |
|---|---|
| Predictable execution paths | Emergent, potentially unpredictable behavior |
| Focused on specific business outcomes | General problem-solving approach |
| Built on proven orchestration patterns | More experimental architectures |
| Production-ready observability | Limited monitoring capabilities |
| Incremental complexity | Often requires substantial initial complexity |

Anthropic outlines several common workflow patterns that offer a balance of reliability and intelligence:

- Prompt chaining: sequential steps where each LLM call processes the output of the previous one

- Routing: an LLM classifier directs inputs to specialized downstream processes

- Parallelization: multiple LLM calls run simultaneously, and their results are aggregated afterward

- Orchestrator-workers: a central LLM breaks down a task, delegates the pieces to specialized worker LLMs, and synthesizes their results

- Evaluator-optimizer: one LLM generates responses while another evaluates them and provides feedback for refinement
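The sketch below shows each of the five patterns as a plain Python function. It illustrates the patterns under stated assumptions and is not Anthropic's code or the Airflow AI SDK's. `llm` is a hypothetical offline stub that stands in for a real model call, and the prompts and handler names are made up. In an Airflow DAG, each function (or each call inside it) could plausibly map onto its own task.

```python
from concurrent.futures import ThreadPoolExecutor


def llm(prompt: str) -> str:
    """Hypothetical stub for a model call so each pattern's control flow runs offline."""
    if prompt.startswith("classify:"):
        return "refund" if "refund" in prompt.lower() else "general"
    if prompt.startswith("plan:"):
        # Pretend the model split the task into subtasks wherever it saw " and ".
        return ";".join(part.strip() for part in prompt[len("plan:"):].split(" and "))
    if prompt.startswith("evaluate:"):
        return "PASS" if "v2" in prompt else "FAIL: needs another pass"
    return f"<{prompt}>"  # default: echo the prompt so nesting shows the call order


# 1. Prompt chaining: each call consumes the previous call's output.
def prompt_chain(text: str, steps: list[str]) -> str:
    for step in steps:
        text = llm(f"{step}: {text}")
    return text


# 2. Routing: a classifier call picks a specialized downstream handler.
HANDLERS = {
    "refund": lambda t: llm(f"draft refund reply: {t}"),
    "general": lambda t: llm(f"draft general reply: {t}"),
}


def route(text: str) -> str:
    return HANDLERS[llm(f"classify: {text}")](text)


# 3. Parallelization: independent calls run concurrently, then get aggregated.
def parallel_review(text: str, aspects: list[str]) -> str:
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(lambda a: llm(f"check {a}: {text}"), aspects))
    return "\n".join(results)  # map() preserves input order


# 4. Orchestrator-workers: one call plans, workers handle subtasks, one call synthesizes.
def orchestrate(task: str) -> str:
    subtasks = llm(f"plan: {task}").split(";")
    results = [llm(f"do: {sub}") for sub in subtasks]
    return llm("combine: " + " | ".join(results))


# 5. Evaluator-optimizer: generate, critique, and retry until the evaluator passes.
def evaluate_optimize(task: str, max_rounds: int = 3) -> tuple[str, int]:
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    feedback = "none"
    for round_no in range(1, max_rounds + 1):
        draft = llm(f"write v{round_no} (feedback: {feedback}): {task}")
        verdict = llm(f"evaluate: {draft}")
        if verdict == "PASS":
            return draft, round_no
        feedback = verdict  # feed the critique into the next attempt
    return draft, max_rounds  # best effort once the budget is spent
```

Each function depends only on `llm`, so the patterns compose. For instance, `route` could send refund tickets through `evaluate_optimize` instead of a single call.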

The power of these patterns lies in their composability. They can be combined and customized to address complex business needs while maintaining the control and observability that production systems require.

Most importantly, these patterns start with what Anthropic calls the “augmented LLM”: a language model enhanced with capabilities like retrieval, tools, and memory. This building block forms the foundation of both workflow and agent architectures, with the key difference being how much autonomy the system has in utilizing these capabilities.

A social media platform on Astro saved up to $500K per year that had previously gone to manual content moderation and classification. Their approach? A series of interconnected workflows where these “augmented LLMs” handle specific tasks like content categorization, sensitive information detection, and quality assessment. Each workflow is measurable and monitorable, and it keeps human oversight where needed.
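One common way to keep human oversight inside an LLM workflow is a confidence gate. The following is a sketch under assumptions and does not describe that platform's actual system. The `Moderation` record, the labels, and the 0.8 threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Moderation:
    label: str         # hypothetical labels, e.g. "ok", "spam", "sensitive"
    confidence: float  # model-reported or calibrated score in [0, 1]


def triage(results: list[Moderation], threshold: float = 0.8) -> tuple[list[Moderation], list[Moderation]]:
    """Split LLM moderation results into an auto-applied queue and a human-review queue."""
    auto, review = [], []
    for result in results:
        # Low-confidence or sensitive items go to a person instead of being auto-applied.
        if result.confidence < threshold or result.label == "sensitive":
            review.append(result)
        else:
            auto.append(result)
    return auto, review
```

Counting how many items land in each queue on every run also gives you a simple metric to monitor.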

Organizations have already found success augmenting well-defined workflows with AI today. These workflows provide immediate value while establishing the foundation for more sophisticated agent architectures as use cases and technologies mature.

The orchestration advantage

What these success stories share is a fundamental insight: orchestration matters as much as the LLM itself. The value comes not just from what the model can understand or generate, but from how its capabilities are integrated into business processes.

This is where Apache Airflow has emerged as a critical foundation. Already trusted for data orchestration across thousands of organizations, Airflow provides the reliability, observability, and operational maturity that production AI systems demand.

But until now, teams have had to develop their own patterns for integrating LLMs into Airflow workflows. Each organization reinvented similar solutions, writing custom code to handle prompt management, output parsing, and error handling. The lack of standardization has slowed adoption and made it difficult to share best practices across teams.
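To give a sense of that duplicated glue code, here is a hypothetical hand-rolled version in plain Python. It includes a small prompt-template registry, JSON output parsing, and retries with exponential backoff when the model returns malformed output. The template name, retry count, and JSON shape are illustrative assumptions.

```python
import json
import string
import time
from typing import Callable

# Prompt management: templates live in one registry instead of scattered f-strings.
PROMPTS = {
    "extract_sentiment": string.Template(
        'Return JSON like {"sentiment": "positive|negative|neutral"} for: $text'
    ),
}


def call_with_parsing(
    model: Callable[[str], str],
    template: str,
    *,
    retries: int = 3,
    backoff_s: float = 0.0,
    **values: str,
) -> dict:
    """Render a prompt, call the model, and retry when the reply is not a JSON object."""
    prompt = PROMPTS[template].substitute(**values)
    last_error: Exception | None = None
    for attempt in range(retries):
        reply = model(prompt)
        try:
            parsed = json.loads(reply)  # output parsing
            if not isinstance(parsed, dict):
                raise ValueError("expected a JSON object")
            return parsed
        except ValueError as err:  # json.JSONDecodeError is a ValueError subclass
            last_error = err
            time.sleep(backoff_s * (2 ** attempt))  # exponential backoff between attempts
    raise RuntimeError(f"model never returned valid JSON: {last_error}")
```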

Introducing the Airflow AI SDK

To bridge this gap, we’ve distilled these battle-tested patterns into a practical SDK that extends Apache Airflow with LLM-specific capabilities built on Pydantic AI. The Airflow AI SDK provides a declarative way to incorporate LLMs into workflows, with built-in support for structured outputs, conditional branching, and agent integration when needed.

The SDK follows Airflow’s TaskFlow pattern with three key decorators:

- @task.llm: Define tasks that call language models with automatic output parsing

- @task.agent: Orchestrate multi-step AI reasoning with custom tools

- @task.llm_branch: Change workflow control flow based on LLM outputs
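Conceptually, the decorated function body prepares the model input, and the decorator makes the model call. The mock below is a hypothetical, dependency-free sketch of that idea for the first and third decorators. It is not the SDK's implementation, and `fake_model`, `llm_step`, and `llm_branch_step` are invented names. For branching, it assumes the model's answer must name one of the allowed downstream task IDs. This mirrors how a regular Airflow branch task returns the task ID to follow.

```python
import functools
from typing import Callable


def fake_model(prompt: str) -> str:
    """Hypothetical model stub: escalates outages, auto-replies to everything else."""
    return "escalate" if "outage" in prompt.lower() else "auto_reply"


def llm_step(model: Callable[[str], str]):
    """Mock of the idea behind @task.llm: the body builds the prompt, the wrapper calls the model."""
    def decorator(fn: Callable[..., str]) -> Callable[..., str]:
        @functools.wraps(fn)
        def wrapper(*args, **kwargs) -> str:
            prompt = fn(*args, **kwargs)  # user code prepares the LLM input
            return model(prompt)
        return wrapper
    return decorator


def llm_branch_step(model: Callable[[str], str], branches: set[str]):
    """Mock of the idea behind @task.llm_branch: the model's answer must name a downstream task."""
    def decorator(fn: Callable[..., str]) -> Callable[..., str]:
        @functools.wraps(fn)
        def wrapper(*args, **kwargs) -> str:
            choice = model(fn(*args, **kwargs)).strip()
            if choice not in branches:
                raise ValueError(f"model chose unknown branch {choice!r}")
            return choice  # in an Airflow branch task, the returned task ID selects the path
        return wrapper
    return decorator


@llm_step(str.upper)  # stub "model" that uppercases the prompt so the flow is visible
def summarize(feedback: str) -> str:
    return f"Summarize: {feedback}"


@llm_branch_step(fake_model, branches={"escalate", "auto_reply"})
def route_ticket(ticket: str) -> str:
    return ticket.strip()
```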

This approach maintains the familiarity of Airflow while adding powerful AI capabilities. Consider this example of analyzing product feedback:

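```python
import re
from typing import Literal

import airflow_ai_sdk as ai_sdk
from airflow.decorators import task


def mask_pii(text: str) -> str:
    """Placeholder PII redaction (hypothetical helper): masks e-mail addresses only."""
    return re.sub(r"[\w.+-]+@[\w-]+\.[\w.]+", "[EMAIL]", text)


class ProductFeedbackSummary(ai_sdk.BaseModel):
    summary: str
    sentiment: Literal["positive", "negative", "neutral"]
    feature_requests: list[str]


@task.llm(
    model="gpt-4o-mini",
    result_type=ProductFeedbackSummary,
    system_prompt="Extract the summary, sentiment, and feature requests from the product feedback.",
)
def summarize_product_feedback(feedback: str | None = None) -> ProductFeedbackSummary:
    """
    This task summarizes the product feedback. You can add logic here to transform the input
    to the LLM, for example to perform PII redaction.
    """
    # Whatever this function returns is the input sent to the LLM.
    feedback_anonymized = mask_pii(feedback or "")
    return feedback_anonymized
```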

With just a few lines of code, this task calls an LLM, provides appropriate context, enforces a structured output format (using Pydantic models), and integrates with Airflow’s error handling. The same pattern can be applied to countless use cases, from content moderation to data extraction to decision support.
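In the SDK example, Pydantic does the validation. To show roughly what enforcing a structured output means, here is a dependency-free approximation with a dataclass. `FeedbackSummary` and `parse_summary` are hypothetical names, not SDK functions. A reply whose `sentiment` falls outside the `Literal` values raises an exception. An exception raised in an Airflow task fails that attempt, so the task's normal retry settings apply.

```python
import json
from dataclasses import dataclass
from typing import Literal, get_args

Sentiment = Literal["positive", "negative", "neutral"]


@dataclass
class FeedbackSummary:
    """Stdlib stand-in for ProductFeedbackSummary (the SDK example uses a Pydantic model)."""

    summary: str
    sentiment: Sentiment
    feature_requests: list[str]


def parse_summary(raw: str) -> FeedbackSummary:
    """Validate a model's JSON reply against the schema, raising ValueError on any mismatch."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if not isinstance(data.get("summary"), str):
        raise ValueError("summary must be a string")
    if data.get("sentiment") not in get_args(Sentiment):
        raise ValueError(f"unexpected sentiment: {data.get('sentiment')!r}")
    requests = data.get("feature_requests")
    if not isinstance(requests, list) or not all(isinstance(r, str) for r in requests):
        raise ValueError("feature_requests must be a list of strings")
    return FeedbackSummary(data["summary"], data["sentiment"], requests)
```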

For more complex scenarios, the SDK also supports agent-based workflows:

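```python
import urllib.request

from airflow.decorators import task
from airflow.models.dagrun import DagRun
from pydantic_ai import Agent
from pydantic_ai.common_tools.duckduckgo import duckduckgo_search_tool


def get_page_content(url: str) -> str:
    """Fetch the raw HTML of a web page (hypothetical helper; swap in your own scraper)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="ignore")


deep_research_agent = Agent(
    "o3-mini",
    system_prompt="You are a deep research agent...",
    tools=[duckduckgo_search_tool(), get_page_content],
)


@task.agent(agent=deep_research_agent)
def deep_research_task(dag_run: DagRun) -> str:
    """
    This task performs deep research on the given query.
    """
    # Read the research query from the DAG run's configuration.
    query = dag_run.conf.get("query")
    print(f"Performing deep research on {query}")
    return query
```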

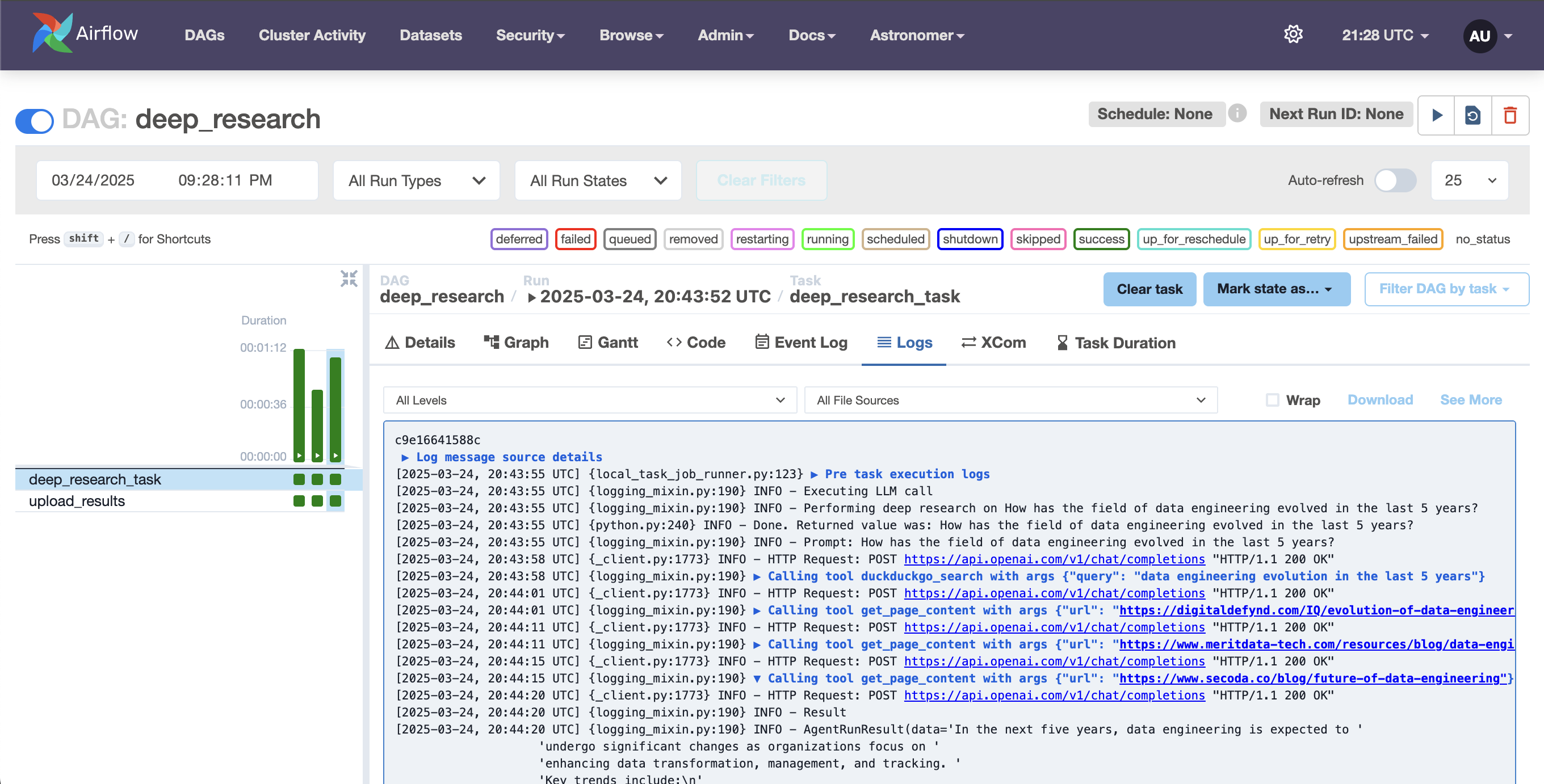

The best part: Airflow gives you built-in logging and observability, so you don’t need to turn to a separate LLM observability tool. The AI SDK automatically organizes each tool call into its own log group, so you can see exactly what your agent is doing and drill into specific steps.
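Airflow's task-logging documentation (2.9 and later) describes folding log lines between `::group::<title>` and `::endgroup::` markers. Assuming that mechanism, a per-tool-call log group could be sketched as follows. The decorator and the `get_page_content` body are hypothetical. This is an assumption about the mechanism, not the SDK's actual code.

```python
import functools
from typing import Callable


def log_group(sink: Callable[[str], None] = print):
    """Decorator factory: fold each tool call and its result into one collapsible log section."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            call = f"{fn.__name__}({', '.join(map(repr, args))})"
            # Assumed Airflow 2.9+ behavior: the log viewer folds lines until ::endgroup::.
            sink(f"::group::Tool call: {call}")
            try:
                result = fn(*args, **kwargs)
                sink(f"result: {result!r}")
                return result
            finally:
                sink("::endgroup::")  # always close the group, even if the tool raises
        return wrapper
    return decorator


@log_group()
def get_page_content(url: str) -> str:
    """Hypothetical tool; a real version would fetch and clean the page."""
    return f"contents of {url}"


get_page_content("https://example.com")
```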

This approach gives you the best of both worlds: agent capabilities when needed, within the reliable orchestration framework that Airflow provides.

Start simple, scale gradually

The most successful AI implementations start with focused use cases and proven patterns. By building on orchestration fundamentals, you can deliver immediate business value while establishing the foundation for more sophisticated workflows.

As your confidence grows and use cases expand, you can gradually introduce more complex patterns—agent-based tasks, multi-step reasoning, dynamic branching based on LLM outputs. But each step builds on a solid foundation of operational reliability and business value.

Don’t chase agent complexity prematurely. The real opportunity lies in connecting LLMs to your existing business processes through reliable, observable workflows. The Airflow AI SDK provides a path to do exactly that, pairing cutting-edge AI capabilities with production-grade orchestration.

The future of AI in business isn’t entirely autonomous agent swarms making independent decisions. It’s thoughtfully designed workflows where LLMs handle the tasks they excel at, integrated into systems that maintain human oversight, operational reliability, and clear business outcomes. That future is available today.

To learn more about the AI SDK and get started, check out the GitHub repository. We’re also hosting a webinar on June 26, 2025 where we’ll go deep on the AI SDK and see how LLM workflows are built at leading tech companies.