The Airflow Year in Review 2022

This was an exciting year for Apache Airflow®. With more than 5,000 commits and the total number of contributors eclipsing 2,300, Airflow is more vibrant than ever.

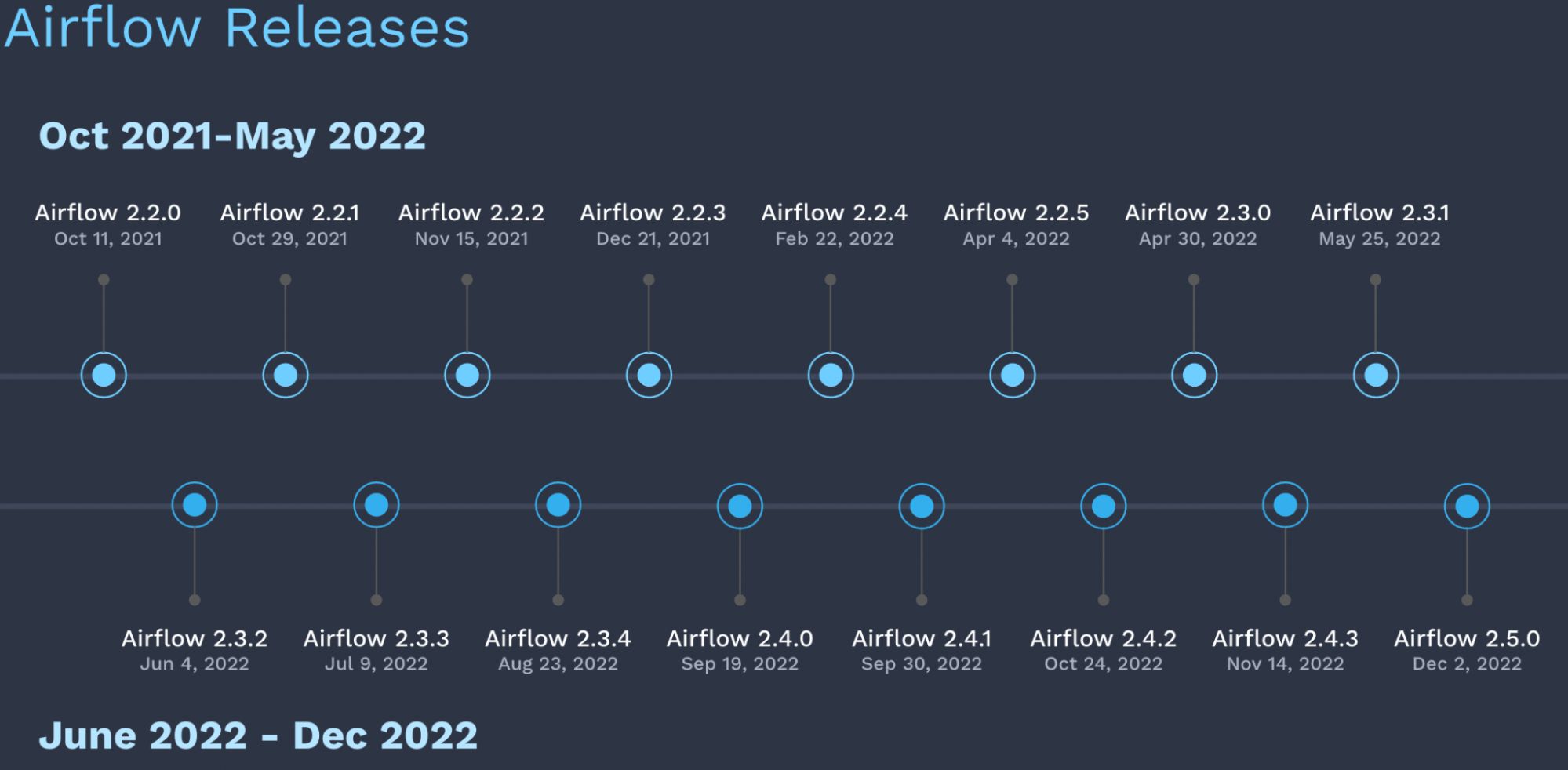

As we look back at the year in Airflow, it’s important to remember that a consistent and reliable release process underpinned much of what reached the community. In 2022, there were 12 releases of core Apache Airflow®, with the community adopting a roughly biweekly release cadence for the second half of the year. This is in addition to monthly provider releases, as well as releases of the official Helm chart.

Figure 1: Airflow releases since October 2021.

With all of these releases came great new features that expanded Airflow’s use cases, made DAG authoring simpler, and improved operations and monitoring.

Data-Dependent Scheduling

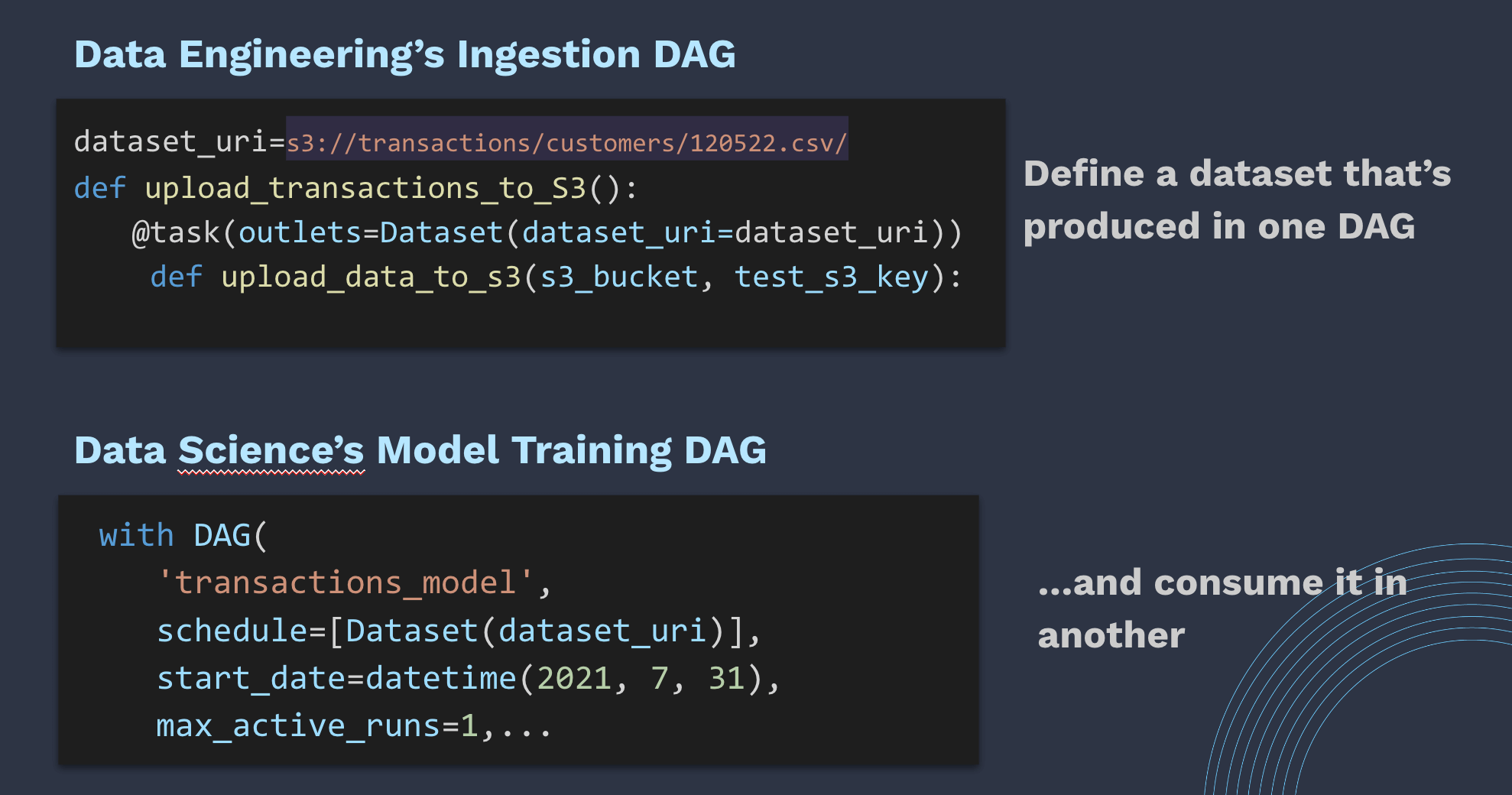

Perhaps the year’s most exciting development was the arrival of data-dependent scheduling in Airflow. Starting with the release of Airflow 2.4 in September, users can now define dataset objects as part of their DAGs, and schedule DAGs based on changes to underlying datasets.

Data-dependent scheduling makes it easier for data teams to collaborate. The work of data engineers, data scientists, and data analysts depends on DAGs that others have written — a data scientist, for example, might be unable to train a fraud detection model without the transactions table being made by an analytics engineer, who couldn’t create that table without the ingestion DAGs written by a data engineer, and so on.

Figure 2: Data-dependent scheduling allows for DAGs to be scheduled based on updates to underlying data.

Previously, teams would need to coordinate their DAGs with a series of sensors, TriggerDagRunOperator tasks, and other custom logic to govern the underlying process flow. The new Dataset class that’s part of data-dependent scheduling allows users to define and view data dependencies and easily implement event-driven DAG schedules. It also allows for larger DAGs to be broken into smaller and more maintainable micropipelines.
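To make the triggering idea concrete, here is a minimal plain-Python model of data-dependent scheduling. It does not use the Airflow API: ConsumerDag, ToyScheduler, and the dataset URIs are hypothetical names invented for illustration. It also assumes one particular rule, namely that a DAG waiting on several datasets runs once every one of them has been updated since its last run.

```python
from dataclasses import dataclass, field


@dataclass
class ConsumerDag:
    """Hypothetical stand-in for a DAG that is scheduled on datasets."""
    dag_id: str
    schedule: frozenset                          # dataset URIs this DAG waits on
    pending: set = field(default_factory=set)    # URIs updated since the last run
    runs: int = 0


class ToyScheduler:
    """Tiny model of dataset-driven triggering (not Airflow's scheduler)."""

    def __init__(self, dags):
        self.dags = list(dags)

    def dataset_updated(self, uri):
        """Record an update to `uri` and return the ids of DAGs it triggers."""
        triggered = []
        for dag in self.dags:
            if uri not in dag.schedule:
                continue
            dag.pending.add(uri)
            # Assumed rule: run only once every dataset the DAG depends on
            # has been updated since the DAG's previous run.
            if dag.pending == dag.schedule:
                dag.runs += 1
                dag.pending.clear()
                triggered.append(dag.dag_id)
        return triggered


# Hypothetical dataset URIs and DAG ids.
TRANSACTIONS = "s3://example-bucket/transactions"
CUSTOMERS = "s3://example-bucket/customers"

train = ConsumerDag("train_fraud_model", frozenset({TRANSACTIONS, CUSTOMERS}))
report = ConsumerDag("daily_report", frozenset({TRANSACTIONS}))
scheduler = ToyScheduler([train, report])

first = scheduler.dataset_updated(TRANSACTIONS)   # only daily_report is ready
second = scheduler.dataset_updated(CUSTOMERS)     # train_fraud_model is now ready
```

In this model the producing team never references the consuming DAGs. It only reports that a dataset changed, which is the decoupling that replaces hand-wired sensors and cross-DAG triggers.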

Dynamic Task Mapping

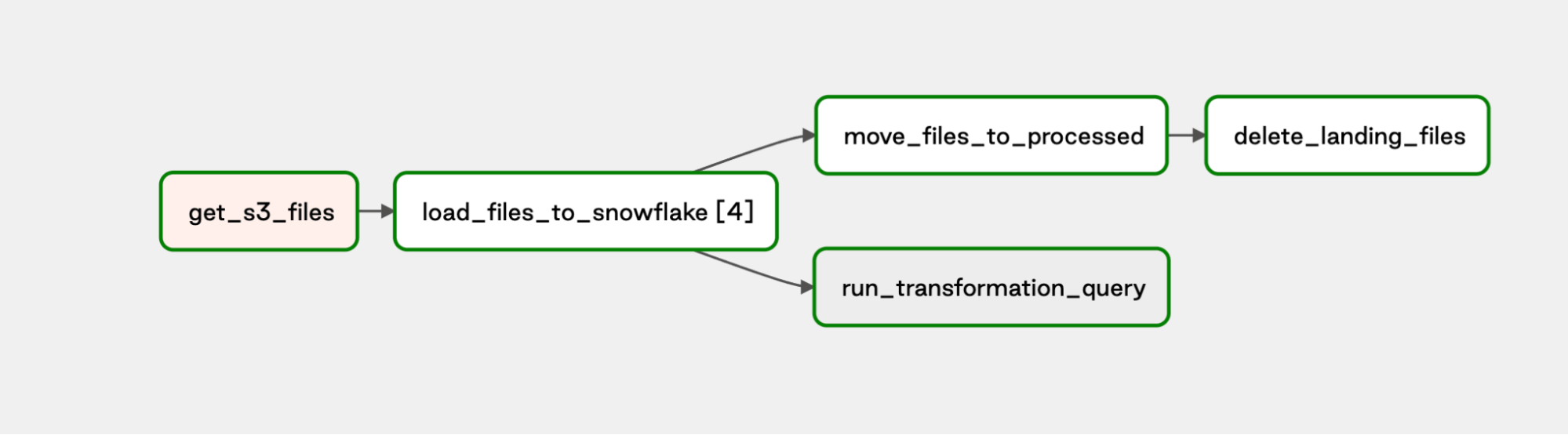

Dynamic task mapping, which debuted with Airflow 2.3 in April, was one of the most anticipated features in Airflow. It gives users the ability to generate tasks dynamically at runtime based on an external condition, something that can change each time a workflow runs.

Figure 3: In this example, the load_files_to_snowflake task is mapped over four files and generates a task for each file.

In Figure 3, the load_files_to_snowflake task has four mapped task instances, each with its own map index, created dynamically from the number of files found in S3 at runtime. As with the MapReduce programming model, the number of files mapped over can change each time, but no changes are required to the underlying DAG code.
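The map-then-reduce shape described here can be sketched in plain Python. This is a conceptual model rather than Airflow code: expand, count_loaded, run_pipeline, and the file names are hypothetical, and the mapped instances run one after another here, whereas Airflow tracks and schedules each one as its own task instance.

```python
def load_file_to_snowflake(path):
    """One mapped task instance: pretend to load a single file."""
    return {"file": path, "status": "loaded"}


def expand(task_fn, inputs):
    """Fan out: create one task instance per input, keyed by map index."""
    return {map_index: task_fn(item) for map_index, item in enumerate(inputs)}


def count_loaded(mapped_results):
    """Reduce: collapse the mapped results into a single summary value."""
    return sum(1 for r in mapped_results.values() if r["status"] == "loaded")


def run_pipeline(files_in_bucket):
    """The 'DAG': identical code no matter how many files show up."""
    mapped = expand(load_file_to_snowflake, sorted(files_in_bucket))
    return mapped, count_loaded(mapped)


# Hypothetical bucket listings for two different runs.
monday_files = ["a.csv", "b.csv", "c.csv", "d.csv"]
tuesday_files = ["a.csv", "b.csv"]

monday_mapped, monday_loaded = run_pipeline(monday_files)
tuesday_mapped, tuesday_loaded = run_pipeline(tuesday_files)
```

The first run produces four mapped instances and the second produces two, with no change to run_pipeline itself. The number of tasks is decided by the data at runtime, not by the pipeline definition.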

Airflow 2.4 and 2.5 expanded this feature by introducing support for mapping over task outputs, task groups, cross products, and just about every condition you come across when working with data.

Astronomer Staff Software Engineer T.P. Chung and Astronomer Director of Airflow Engineering Ash Berlin-Taylor played instrumental roles in the design and development of dynamic task mapping for the Apache Airflow® project. Chung notes that at a low level, this feature abstracts much of the work authors used to perform on their own — e.g., by designing for loops — and moves it into Airflow. This means dynamic tasks are now a fully supported Airflow feature, with complete observability, task instance history, and task state management, rather than a workaround that a user has to implement.

“Abstraction of this kind is what Airflow has always done,” Chung says, citing Airflow DAGs, which abstract task dependencies. “And with every new abstraction, Airflow keeps making our lives easier.”

The Airflow UI

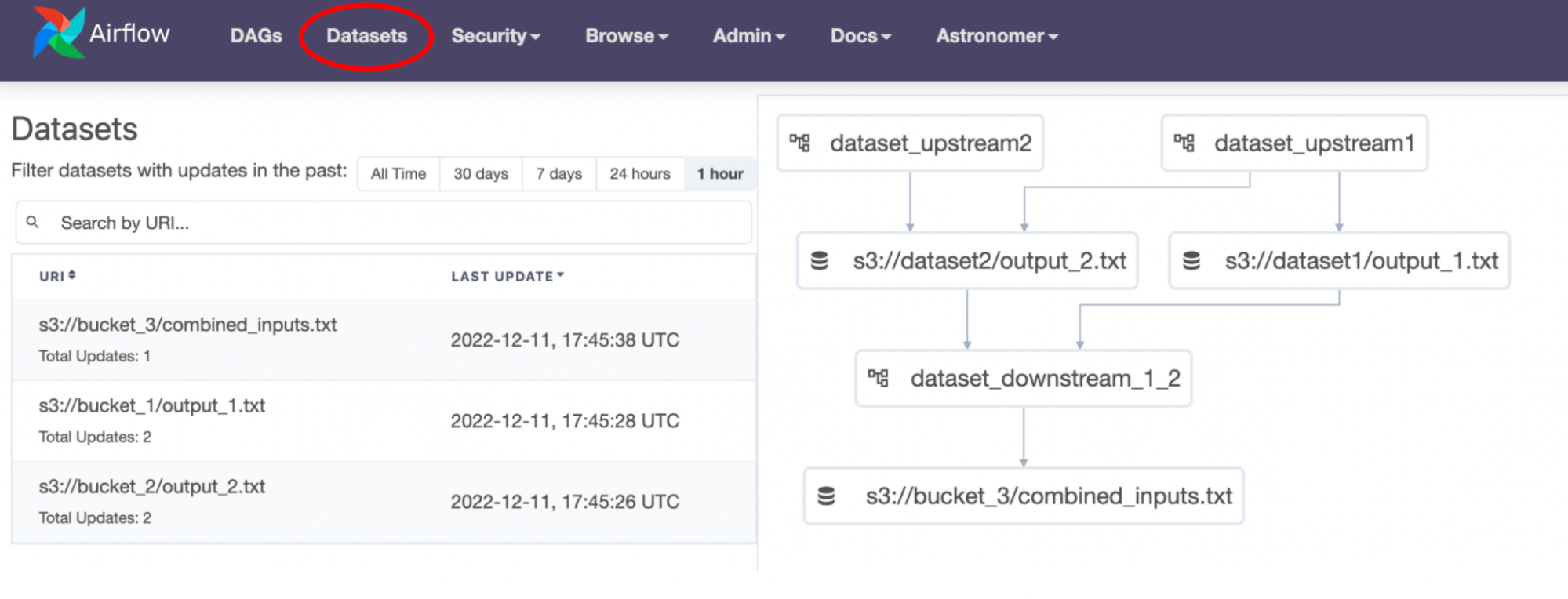

This year, new ways to write DAGs were complemented by UI changes that made monitoring and organization of DAGs easier. Airflow 2.3 introduced the Grid view as part of what was needed to support dynamically mapped tasks. The Grid view, a replacement for the Tree view, makes it easier to find tasks that failed, access logs, and do all the other things folks have to do after deploying a DAG. A few months later, Airflow 2.4 added a new Datasets view that displays all of the datasets in your Airflow environment and a graph showing how your DAGs and datasets are connected.

Figure 4: The Datasets view shows how DAGs and datasets are related.

Better organization of task states, corresponding logs, and the datasets that DAGs produce and consume helps teams and users work together, but it’s not always enough. Sometimes, there’s context around a specific run, DAG, or part of a DAG that needs to be added. In December, Airflow 2.5 addressed this need with the release of commenting functionality, which lets users add comments to specific task instances and DAG runs.

Astronomer Open Source, Built on the Shoulders of Airflow

As Airflow matures as an open-source project, it becomes a platform on which additional open-source projects can be built. There are projects throughout the ecosystem for everything from writing DAGs (for example, Gusty and DAG Factory) to UI enhancements. In the same way, here at Astronomer we’re building Astro OSS as a way to work collaboratively and quickly with the Airflow community around next-generation initiatives, all designed to complement the core of Apache Airflow®.

Here are the open-source initiatives we focused on in 2022:

Astro Python SDK

Released in August, the Astro Python SDK is an Apache 2.0-licensed DAG authoring framework that can be installed into any Airflow environment and that removes barriers to entry for folks writing DAGs. Because the SDK abstracts away Airflow-specific syntax, Python- and SQL-savvy users can focus on what they know best: writing transformation logic in Python or SQL. Out of the box, the SDK has support for common ELT operations like loading files into data warehouses, merging tables, checking data quality, and switching between Python and SQL. The Astro Python SDK hides not only the boilerplate Airflow code, but also any syntax specific to the underlying database being used.

Since August, minor updates of the Astro Python SDK have been released every month. The SDK now supports standard ELT operations for Snowflake, BigQuery, Postgres, Redshift, S3, Blob Storage, GCS, and MinIO, with support for more data objects, file types, and functions coming. At Astronomer, we use the Astro Python SDK to power our own Astro Cloud IDE, and we’re excited to see what the community builds on top of and around the SDK to make DAG authoring more accessible to as many folks as possible.

Astronomer Providers

The Airflow 2.2 release in October 2021 introduced the triggerer – a new Airflow core component that allows users to run tasks asynchronously.

Deferrable operators take advantage of the triggerer to release their worker slot while waiting for work to be completed, saving the user resource costs and increasing the scalability of Airflow. In 2022, the community built deferrable operators for systems that tend to handle long-running tasks, such as Databricks and Dataproc. Additionally, Astronomer Providers were released in July, featuring more than 60 deferrable Airflow modules that are maintained by Astronomer. Astronomer Providers are Apache 2.0-licensed, can be installed into any Airflow environment, and work as drop-in replacements for their synchronous counterparts.
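The benefit of releasing a worker slot during a wait can be illustrated with a small asyncio model. This is a sketch under stated assumptions, not Airflow's implementation: the semaphore stands in for a pool of worker slots, the sleep stands in for a long-running external job, and blocking_task, deferrable_task, and run_all are hypothetical names.

```python
import asyncio
import time

WAIT_SECONDS = 0.05  # stand-in for a long-running external job


async def blocking_task(slots):
    # Holds its worker slot for the entire wait, like a synchronous operator.
    async with slots:
        await asyncio.sleep(WAIT_SECONDS)
        return "done"


async def deferrable_task(slots):
    # Phase 1: occupy a slot only briefly (e.g. to submit the job), then defer.
    async with slots:
        pass
    # The wait happens outside any worker slot; in this model the shared
    # event loop plays the role of the triggerer.
    await asyncio.sleep(WAIT_SECONDS)
    # Phase 2: take a slot again only to finish up.
    async with slots:
        return "done"


async def run_all(task_fn, n_tasks, n_slots):
    # Create the semaphore inside the running loop so it binds to that loop.
    slots = asyncio.Semaphore(n_slots)
    started = time.perf_counter()
    results = await asyncio.gather(*(task_fn(slots) for _ in range(n_tasks)))
    return results, time.perf_counter() - started


# Three tasks competing for a single worker slot.
blocking_results, blocking_elapsed = asyncio.run(run_all(blocking_task, 3, 1))
deferred_results, deferred_elapsed = asyncio.run(run_all(deferrable_task, 3, 1))
```

With one slot, the blocking tasks wait one after another, while the deferred tasks wait concurrently and touch the slot only at the start and end. That is why the deferred run finishes in roughly the time of a single wait.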

The Year Ahead

The past year saw 3,000 pull requests merged into Airflow, so the items highlighted here are really just an executive summary of the full activity and progress of Airflow in 2022. The difference between what you can do with Airflow in December of 2022 versus a year ago is extraordinary. It was a year of rapid, ceaseless innovation, with new features building on one another and their benefits compounding to transform how teams connect to their systems, author DAGs, and collaborate.

None of this would have been possible without the Airflow community and the tireless work that happens behind the scenes to test releases, report bugs, and so much more. And many of the updates in 2022 set the stage for 2023, particularly around increasing support for data-dependent functionality in Airflow and simplifying the DAG-authoring experience (there are already signs of this with the recent AIP-52 proposal). Stay tuned for what looks to be another outstanding year for the Airflow project.