Introducing New Astro CLI Commands to Make DAG Testing Easier


At Astronomer, we take pride in providing a world-class development experience for Airflow users. Since our origin as a company, the Astro CLI has been at the core of how we deliver that experience.

The Astro CLI is an open source binary that provides a self-contained local development environment in which you can build, test, and run Airflow DAGs and tasks. If you’re an Astronomer customer, you can also use the Astro CLI to deploy seamlessly to Astro in the cloud. But even if you aren’t, the Astro CLI is still the best way to run Airflow locally as you build and debug your pipelines.

Introducing New Astro CLI Commands

Testing DAGs locally prior to promoting them to production is central to the development workflow of any user. Today, we’re excited to announce two CLI commands that enhance this experience for the community: astro dev parse and astro dev pytest.

In short, the astro dev parse command parses your DAGs to ensure that they don’t contain any basic syntax or import errors and that they can successfully render in the Airflow UI. This accelerates development by enabling your team to identify errors quickly and ahead of time. It also significantly reduces the time it takes to debug your pipelines – in many cases, by hours.

The second command, astro dev pytest, provides a native way to add pytests to your Astro project and run them against your DAGs locally. When you run this command, the CLI shows you the test results in your terminal. You can run it before deploying your code to production to confirm that your DAGs comply with your team’s requirements. If you’re new to Airflow and don’t have pytests yet, the Astro CLI includes a basic pytest by default that checks for things like unique DAG IDs, syntax errors, required arguments, and more. In this way, astro dev pytest helps keep your production pipelines healthy and secure.
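To make the default checks more concrete, here is a minimal, stdlib-only sketch of two of them: unique DAG IDs and required arguments. It is not the test file the Astro CLI generates. The `DagInfo` record, the `REQUIRED_DEFAULT_ARGS` set, and the helper names are hypothetical; a real test would typically read this metadata from the DAGs Airflow loads (for example through Airflow’s `DagBag`).

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class DagInfo:
    """Hypothetical summary of one parsed DAG (not an Airflow class)."""
    dag_id: str
    file: str
    default_args: dict = field(default_factory=dict)


# Hypothetical team requirement: every DAG must set these default_args keys.
REQUIRED_DEFAULT_ARGS = {"owner", "retries"}


def duplicate_dag_ids(dags):
    """Return the DAG IDs that are defined more than once, sorted."""
    counts = Counter(dag.dag_id for dag in dags)
    return sorted(dag_id for dag_id, n in counts.items() if n > 1)


def missing_default_args(dag, required=REQUIRED_DEFAULT_ARGS):
    """Return the required default_args keys this DAG does not set, sorted."""
    return sorted(required - set(dag.default_args))


def check_dags(dags):
    """Collect every problem first, so one run reports all of them."""
    problems = [f"duplicate dag_id: {dag_id}" for dag_id in duplicate_dag_ids(dags)]
    for dag in dags:
        for key in missing_default_args(dag):
            problems.append(f"{dag.file}: {dag.dag_id} is missing default_args[{key!r}]")
    return problems
```

A pytest test would then simply assert that `check_dags(...)` returns an empty list. Gathering all problems before asserting means a single run surfaces every violation rather than only the first one.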

To understand why these commands are so useful, it helps to understand how most DAG authors write and test DAGs in Airflow today. We’ll use the Astro CLI as an example. Even though the Astro CLI simplifies a lot, some tasks are still time-consuming and, when it comes to debugging simple errors, frustrating.

DAG Testing in Airflow Today

To test a single DAG, most Apache Airflow® users first have to manually install an Airflow environment on their local machine – complete with a locally running executor as well as the Airflow scheduler, webserver, triggerer, and metadata database. All just so they can sanity-check their DAG code.

This is needlessly complicated and can take hours, if not days. By contrast, the Astro CLI allows you to provision a local environment with all required Airflow components in less than five minutes. This simplifies the process of building, testing, and deploying your DAGs. It also lowers the barrier to new users getting started.

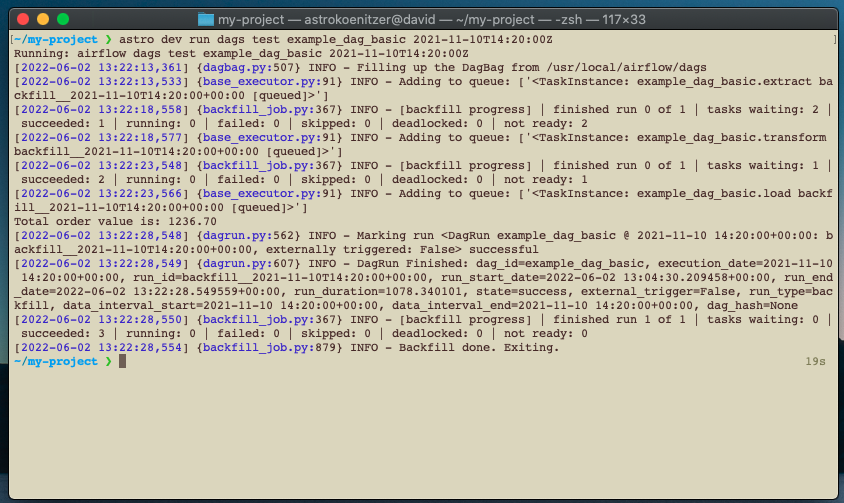

Once you have a local Airflow environment running, a data or DevOps engineer might want to test DAGs via the command line. Today, the only way to do this is with the airflow dags test <dag_id> or airflow tasks test <dag_id> <task_id> commands available in the Airflow CLI. In the Astro CLI, Airflow CLI commands can be run locally with astro dev run, so the user can run astro dev run dags test or astro dev run tasks test to test a specific DAG or task. astro dev run <dags or tasks> test is a useful command, but it has a drawback: it can take several minutes to spin up the first time you run it, and each test can take several minutes to run.
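The two Airflow CLI forms differ in their positional arguments: dags test takes a DAG ID, while tasks test takes a DAG ID and a task ID. The sketch below is a hypothetical convenience helper, not part of the Astro CLI; it only builds the argument lists for astro dev run so the difference is explicit. Any further arguments, such as a date, are left out, and `example_dag` and `extract` are placeholder IDs.

```python
import shlex
import subprocess


def airflow_test_command(dag_id, task_id=None):
    """Build argv for `astro dev run dags test` or `astro dev run tasks test`.

    Hypothetical helper: with no task_id it tests the whole DAG,
    otherwise it tests a single task of that DAG.
    """
    if task_id is None:
        return ["astro", "dev", "run", "dags", "test", dag_id]
    return ["astro", "dev", "run", "tasks", "test", dag_id, task_id]


def run_test(dag_id, task_id=None):
    """Run the command and return its exit code (requires the Astro CLI on PATH)."""
    return subprocess.run(airflow_test_command(dag_id, task_id)).returncode


if __name__ == "__main__":
    # Print the shell form of each command without running it.
    print(shlex.join(airflow_test_command("example_dag")))
    print(shlex.join(airflow_test_command("example_dag", "extract")))
```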

When you run astro dev run dags test <dag_id> or astro dev run tasks test <dag_id> <task_id>, the Astro CLI needs to spin up all Airflow components locally. Even when this takes only a few moments, it’s unnecessary overhead: you shouldn’t need to spin up an Airflow scheduler, webserver, and workers to find out that your DAG code has a syntax error. Another problem is that the Airflow CLI’s feedback is limited: it tells you what went wrong at the point of failure, but then stops running. To see every error in your DAG or task, you have to fix each one as it appears and restart the process from the beginning.

This means that it can take hours to find and fix errors across all of your Airflow DAGs and tasks.

Testing a DAG with astro dev run dags test

The Benefits of Astro CLI Dev Parse

To get around this, some users create different kinds of custom testing frameworks. These are less than ideal for a few reasons, not least because they’re difficult to reproduce on a consistent basis. (They’re also difficult to maintain – i.e., to keep secure and up to date.) These and other issues prompted the team at Astronomer to develop a first-class local testing framework for Airflow users.

With the Astro CLI’s new astro dev parse command, you can parse your DAGs for import and syntax errors in seconds. astro dev parse quickly lets you know if your DAGs and tasks will render correctly in the Airflow UI. When it detects an error, it alerts you to what it is and where it occurs in your code. Given that it’s unwise to store connections with sensitive credentials locally, astro dev parse only parses your code. It doesn’t identify missing environment variables or connections.
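The core idea, reporting every broken file in one pass along with its location, can be sketched with Python’s standard `ast` module. This is a simplified stand-in, not how astro dev parse is implemented: it catches syntax errors only (at most one per file, because the parser stops at the first error in a file), and it never imports the DAG files, so it would miss import errors and anything else that only surfaces when Airflow loads a DAG.

```python
import ast
from pathlib import Path


def find_syntax_errors(dags_folder):
    """Parse every .py file under dags_folder and collect syntax errors.

    Unlike a fail-fast run, this keeps going after the first bad file, so a
    single pass reports the file, line, and message for each file that does
    not parse. Modules are never imported or executed, so missing packages,
    connections, and environment variables go undetected.
    """
    errors = []
    for path in sorted(Path(dags_folder).rglob("*.py")):
        source = path.read_text(encoding="utf-8")
        try:
            ast.parse(source, filename=str(path))
        except SyntaxError as exc:
            errors.append((str(path), exc.lineno, exc.msg))
    return errors
```

For example, looping over `find_syntax_errors("dags")` and printing each tuple as `file:line: message` gives a compact list you can work through in your editor before running the check again.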

Using your editor of choice, you can quickly go to your code, make your changes, and run astro dev parse again. Assuming the changes you made don’t introduce new errors, your DAGs should render in Airflow. Once you’re satisfied, and if applicable, you can use the Astro CLI to deploy them to prod.

Speaking of which, the Astro CLI’s astro deploy command now incorporates the astro dev parse functionality, so it parses your DAG code during deployment. This quick test ensures that you don’t push import or syntax errors to your cloud deployment. Specifically, the astro deploy command builds your DAGs into an image, parses your DAGs, and then deploys the image. This adds a new level of assurance to the development process and improves the reliability of your deploys. The quick test will not stop deploys because of missing environment variables or connections. (This is a security-conscious feature, not a limitation or shortcoming.) Now you can be sure that the code you deploy is free of import and syntax errors!

Successful test of all DAGs with astro dev parse

The Astro CLI’s New Local Pytest Testing Framework

Traditionally, the Astro CLI provided a directory hierarchy for your DAGs, plugins, and requirements, but did not have an “opinion” about how you should organize your pytests, much less when you should run them against your DAGs. In other words, you could put your pytests anywhere, and you could opt not to run them at all. This was needlessly chaotic: the kind of oversight (verging on benign neglect) that makes reproducing and maintaining an Airflow dev environment harder than it should be.

By default, the Astro CLI now puts all of your pytests in a ./tests subdirectory of your local project. The new astro dev pytest command quickly spins up an Airflow environment for running those tests. If you run astro dev pytest without any arguments, it automatically searches the ./tests subdirectory and runs any tests it finds.
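Conceptually, searching ./tests means running pytest’s normal test discovery over that folder. The sketch below approximates only the file-matching part of that discovery, using pytest’s default `python_files` patterns (`test_*.py` and `*_test.py`). It is an illustration, not the Astro CLI’s code, and a project that overrides its pytest configuration may collect a different set of files.

```python
from fnmatch import fnmatch
from pathlib import Path

# pytest's default `python_files` patterns.
TEST_FILE_PATTERNS = ("test_*.py", "*_test.py")


def discover_test_files(tests_dir="tests"):
    """Return modules under tests_dir whose names match pytest's default patterns.

    Simplified: real pytest collection also honors configuration files,
    ignored directories, and conftest.py plugins, none of which are modeled here.
    """
    return sorted(
        path
        for path in Path(tests_dir).rglob("*.py")
        if any(fnmatch(path.name, pattern) for pattern in TEST_FILE_PATTERNS)
    )
```

Helper modules such as `conftest.py` or a shared `helpers.py` sit in the same folder but are not collected as test files, which is why a flat ./tests directory can hold both tests and their support code.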

You can also have astro dev pytest run specific pytests. However you use it, astro dev pytest gives you a simple, dynamic, frustration-free way to test your DAGs, as well as your custom Python code, tasks, operators, etc. At Astronomer, astro dev pytest has made Python testing much more enjoyable.
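Because the tests run with ordinary pytest, they can cover plain Python code as well as DAG structure. As a hypothetical example, suppose a task calls a helper named `normalize_emails`; a test file in ./tests can exercise that helper directly, without running the DAG. Both the helper and the test are illustrations, not part of the Astro CLI’s default tests, and the `include/` location mentioned in the comment is only one possible place to keep such code.

```python
# Hypothetical helper that a task in your DAG might call; in an Astro project
# it could live under include/ and be imported by both the DAG and the test.
def normalize_emails(rows):
    """Lowercase and strip email addresses, dropping blanks and duplicates."""
    seen = set()
    result = []
    for row in rows:
        email = row.get("email", "").strip().lower()
        if email and email not in seen:
            seen.add(email)
            result.append(email)
    return result


# A pytest test for the helper: a plain function named test_* with bare asserts.
def test_normalize_emails_dedupes_and_cleans():
    rows = [{"email": " Ana@Example.com "}, {"email": "ana@example.com"}, {"email": ""}, {}]
    assert normalize_emails(rows) == ["ana@example.com"]
```

To run only one file like this, you would pass its path to astro dev pytest; check `astro dev pytest --help` for the exact arguments your CLI version accepts.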

Or, at the very least, much less frustrating!

Finding a Syntax Error with astro dev pytest

Run Your Pytests Prior to Deploying Your DAGs

The astro deploy command now gives users the option to run their pytests before deploying to production. This lets you create custom CI tests tailored to your code and your DAGs. Running these tests before you deploy DAGs, tasks, or code helps ensure that you don’t accidentally take down existing pipelines. This amounts to a customizable layer of security that keeps your pipelines healthy.
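What counts as a custom CI test is up to your team. As one hypothetical example, a test might require every DAG to have an approved owner and at least one tag, so a DAG that breaks the convention fails the pre-deploy run instead of reaching production. The policy, the dictionary keys, and the owner names below are all assumptions for illustration; a real test would read these attributes from the DAGs Airflow loads.

```python
# Hypothetical team policy, checked before every deploy.
ALLOWED_OWNERS = {"data-eng", "analytics"}


def policy_violations(dags):
    """Return human-readable policy violations for a list of DAG summaries.

    Each summary is a plain dict with hypothetical keys 'dag_id', 'owner',
    and 'tags'. In a real project these values would come from the loaded DAGs.
    """
    violations = []
    for dag in dags:
        if dag.get("owner") not in ALLOWED_OWNERS:
            violations.append(f"{dag['dag_id']}: owner {dag.get('owner')!r} is not an approved team")
        if not dag.get("tags"):
            violations.append(f"{dag['dag_id']}: at least one tag is required")
    return violations


def test_team_policy():
    # Replace this hard-coded list with summaries of the DAGs your project loads.
    dags = [{"dag_id": "daily_sales", "owner": "data-eng", "tags": ["sales"]}]
    assert policy_violations(dags) == []
```

Returning a list of messages instead of failing on the first check keeps the pytest output useful: one failed run names every DAG that needs attention.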

Successful astro deploy with Custom Pytests

If you haven’t used the Astro CLI before, check it out! We’re confident that it’s the fastest way to get started with Airflow. You can start with our essential Astro CLI Quickstart guide.