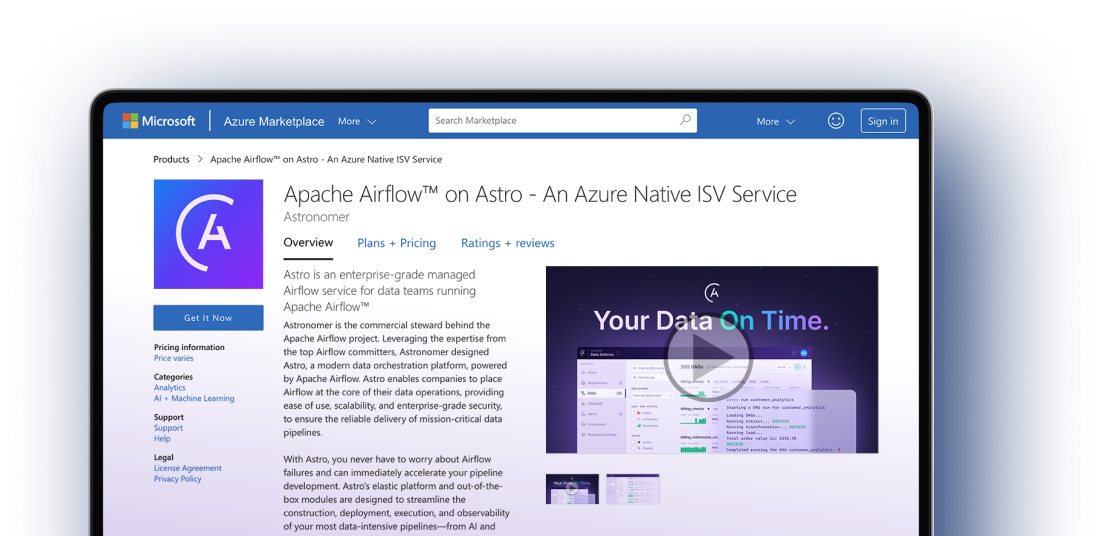

Unlock Seamless Airflow Workflow Orchestration on Azure with Astro

Experience a stress-free approach to Airflow workflow deployment and management in Azure with our fully managed solution.

Discover a new level of power with Astro on Azure

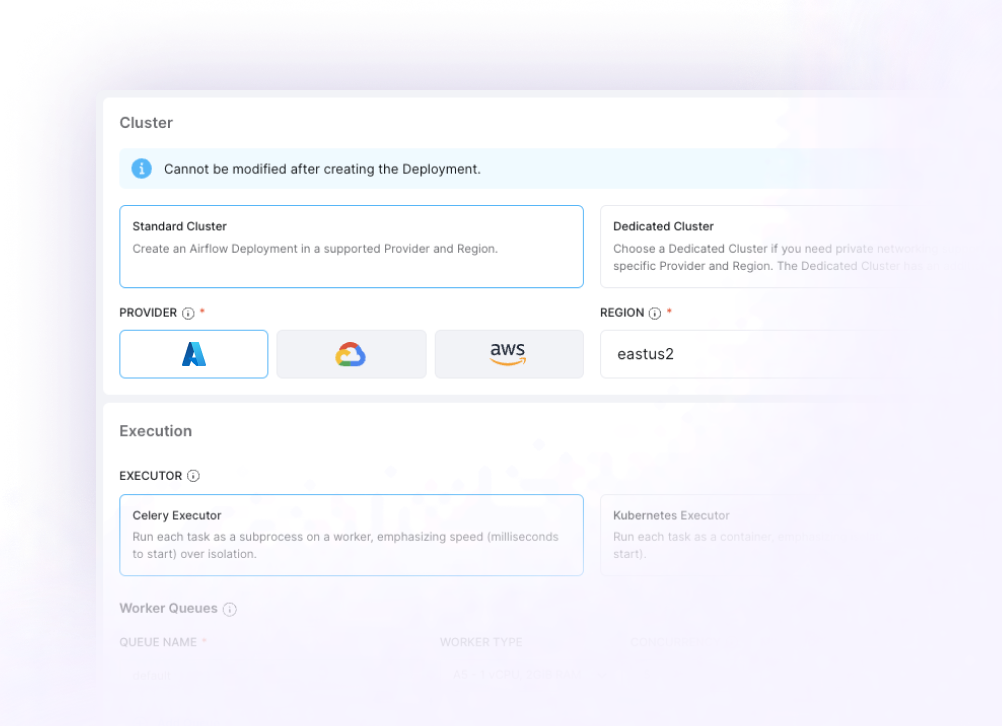

As an Azure-native service, Astro simplifies the entire process of getting started, deploying, and managing Airflow.

Deliver data on time and at scale

Fine-tune your orchestration environment for optimal performance with a wide range of scheduler and worker configurations exclusively available through Astro. With built-in autoscaling, Astro automatically adapts to your workload, ensuring reliability and cost efficiency at all times.

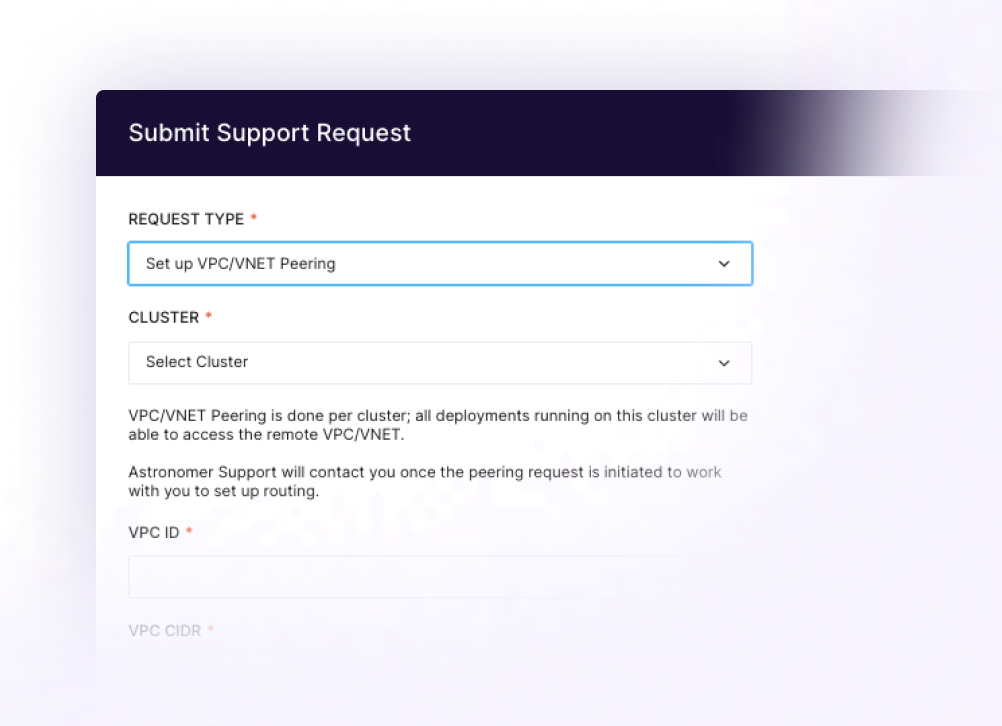

Secure your data with Private Networking in Azure

Ensure the highest level of security and privacy for your data orchestration with Astro’s unique private networking capabilities. As a solution native to your Azure environment, Astro establishes a secure and controlled data ecosystem, providing you with peace of mind and complete data protection.

Unify data across cloud providers and environments

Empower your teams to effortlessly build, run, and grow data pipelines that integrate data from diverse sources distributed across multiple cloud providers and environments. Astro ensures that your pipelines deliver reliable data, on time.

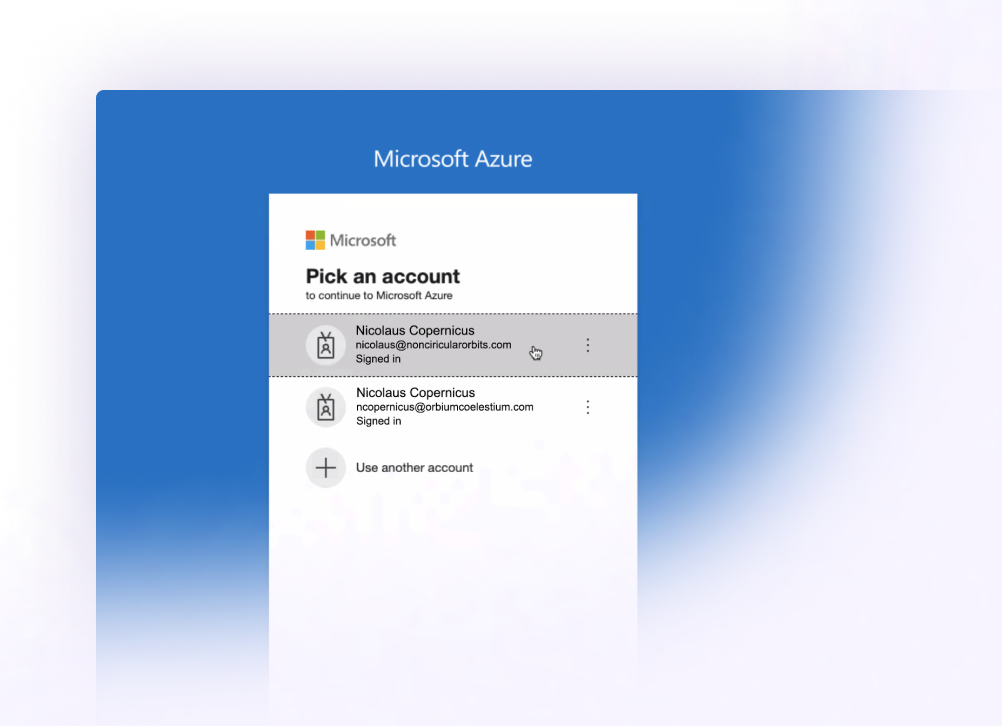

Simplify integration and setup

Enjoy the convenience of 1-click signup and seamless integration tailored to your existing Azure ecosystem. Plus, Astro automatically utilizes your existing Azure credits, saving you money while simplifying your operations.

Get started with Astro on Azure

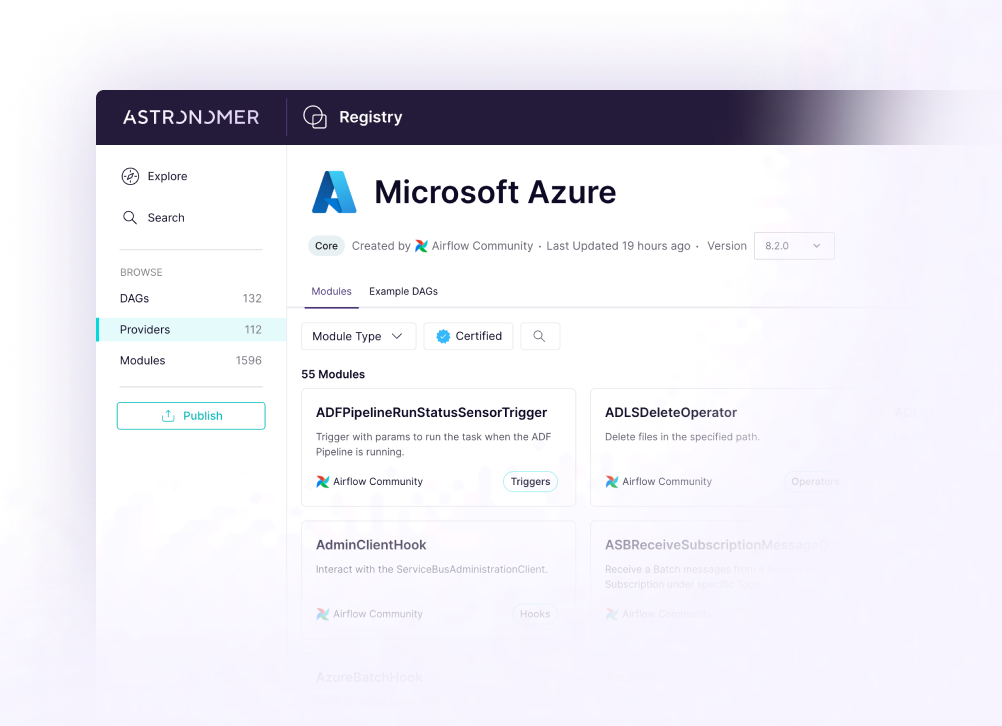

Accelerate your workflow development

Easily discover and access Azure-compatible operators, hooks, and modules to modernize your data stack.

DAG that shows how to run an Azure Data Factory (ADF) pipeline from Airflow

This can be a helpful pattern to follow if you have users who feel more comfortable using ADF or if you already have pipelines there.

Airflow Community

Call Azure Data Factory Pipelines with Airflow

Trigger pre-existing Azure Data Factory pipelines with Airflow.

Airflow Community

Azure Data Explorer and Airflow

Shows how to call Azure Data Explorer from Airflow using the Azure Data Explorer operator.

Airflow Community

Using Astro's Apache Airflow® offering on Azure has modernized our data operations. Their best-in-class SLAs, multi-environment deployments, and intuitive dashboards have streamlined our processes, ensuring we can manage our critical pipelines.

Kevin Schmidt

Sr Manager, Data Engineering

Molson Coors Beverage Company