Airflow in Action: 5.5K Pipelines, 200 Models, 10% of the World’s Web Sites. MLOps Insights From Wix


At this year’s Airflow Summit, Wix detailed how they leverage Apache Airflow as the backend for their machine learning (ML) platform, extending its use beyond traditional pipeline scheduling.

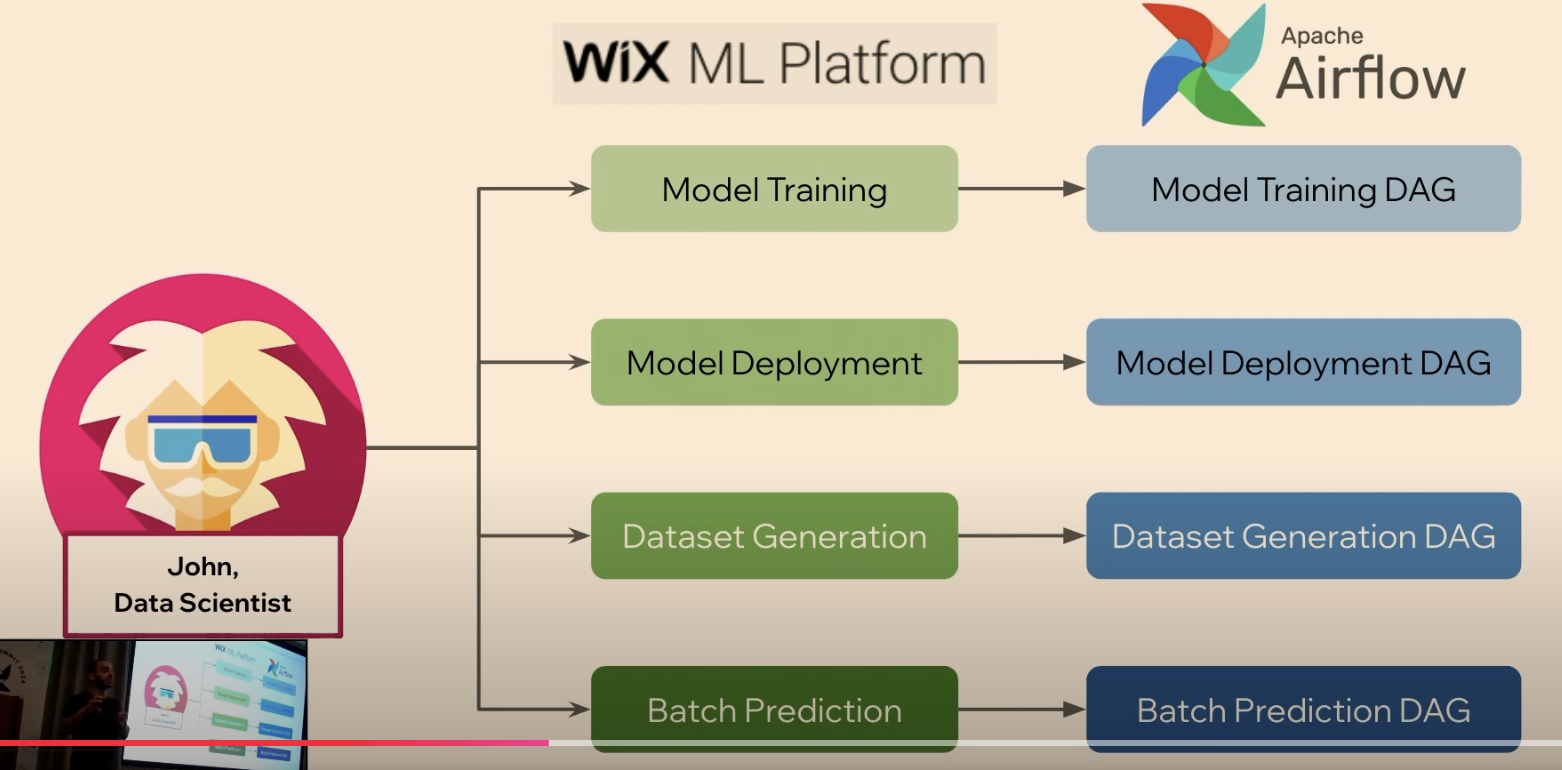

Elad Yaniv, Data and MLOps Engineer at Wix, outlined how the ML platform uses Airflow to streamline ML workflows, enabling data scientists to focus on model development while automating operational tasks.

Overview of Wix and Its ML Platform

Wix is one of the world’s largest website-building platforms, powering an estimated 10% of the world’s websites. It has over 250 million users in 190 countries, with 85,000 new sites created per day.

To support website operations, Wix has invested heavily in its data science practice and runs over 200 machine learning models daily for tasks such as fraud detection, text embeddings, and classification.

To enable these workflows, Wix developed an in-house ML platform, a centralized solution for data scientists to train, deploy, and run their models, using Apache Airflow® as the backend. Wix’s ML platform runs 5,500 Airflow pipelines and 12,000 tasks every day.

How Wix Uses Airflow in Its ML Platform

MLOps is one of the fastest growing use cases for Airflow. Wix uses Airflow’s REST API and Python libraries to integrate it into their ML platform, where it serves three main functions: triggering DAGs on demand, canceling them programmatically, and monitoring them in real time.

Triggering DAGs On Demand

Wix’s DAGs are configured with schedule_interval=None, making them exclusively on-demand. Jobs such as model training, dataset generation and enrichment, or prediction runs are initiated from the ML platform.

Behind the scenes, these jobs are converted into DAGs and triggered using Airflow’s REST API. The DAGs are configured dynamically based on user input, such as model IDs or data parameters, ensuring flexibility for a variety of workflows.
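As a rough illustration of what such a trigger call could look like, the sketch below builds a request against the `POST /api/v1/dags/{dag_id}/dagRuns` endpoint of the Airflow 2 stable REST API, passing user input in the `conf` field. It is not Wix's actual code: the host, DAG ID, credentials, and `conf` keys are all hypothetical, and it assumes basic authentication is enabled on the Airflow webserver.

```python
import base64
import json
import urllib.parse
import urllib.request


def build_trigger_request(base_url, dag_id, conf, user, password):
    """Build (but do not send) a request that starts one DAG run."""
    safe_dag_id = urllib.parse.quote(dag_id, safe="")
    url = f"{base_url.rstrip('/')}/api/v1/dags/{safe_dag_id}/dagRuns"
    # The "conf" object is what the DAG later reads as dag_run.conf.
    body = json.dumps({"conf": conf}).encode("utf-8")
    # Assumption: the webserver accepts HTTP basic authentication.
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


# Hypothetical job submitted from an ML platform UI.
request = build_trigger_request(
    "http://localhost:8080",
    "train_model",
    {"model_id": "fraud-detector", "dataset": "2024-10"},
    "platform-user",
    "platform-password",
)
print(request.get_method(), request.full_url)
# urllib.request.urlopen(request) would submit the run to a live webserver.
```

Building the request separately from sending it keeps the example runnable without an Airflow instance and makes the payload easy to inspect.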

A typical workflow involves the user submitting a job through the ML platform, which sends a request to Airflow’s API with the relevant DAG ID and configuration. The job status and updates are relayed back to the ML platform, enabling seamless interaction for the user.

Figure 1: Each job a data scientist wants to run in the ML platform triggers an Airflow DAG behind the scenes. Image source.

Note that the upcoming Apache Airflow 3 release offers additional enhancements to MLOps workloads, including:

- Support for on-demand execution of advanced AI inference.

- Extended MLOps support for backfills as models evolve.

Canceling DAGs Programmatically

To handle errors or changes in requirements, Wix developed a cancellation mechanism using Airflow’s Python libraries. Users can cancel jobs directly from the ML platform, which triggers a dedicated “cancellation DAG.” This DAG identifies the target DAG run, retrieves unfinished tasks using its task instance and state, and sets their status to failed.
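The selection logic described above can be sketched in plain Python. This is only a model of the idea, not Wix's implementation: `TaskRecord` is a hypothetical stand-in for an Airflow task instance, and the set of finished states is an assumption about which states should be left untouched. A real cancellation DAG would query and update task instances through Airflow's own models and database session.

```python
from dataclasses import dataclass
from typing import Optional

# Assumption: tasks in these states are already finished and are left alone.
FINISHED_STATES = {"success", "failed", "skipped", "upstream_failed", "removed"}


@dataclass
class TaskRecord:
    """Hypothetical stand-in for one Airflow task instance."""
    dag_id: str
    run_id: str
    task_id: str
    state: Optional[str]  # None means the task has not been scheduled yet


def cancel_run(tasks, dag_id, run_id):
    """Mark every unfinished task of one DAG run as failed; return their ids."""
    cancelled = []
    for task in tasks:
        if task.dag_id != dag_id or task.run_id != run_id:
            continue  # belongs to a different DAG run
        if task.state in FINISHED_STATES:
            continue  # nothing left to cancel
        task.state = "failed"
        cancelled.append(task.task_id)
    return cancelled


tasks = [
    TaskRecord("train_model", "run-1", "load_data", "success"),
    TaskRecord("train_model", "run-1", "fit", "running"),
    TaskRecord("train_model", "run-1", "evaluate", None),
    TaskRecord("train_model", "run-2", "fit", "running"),
]
cancelled = cancel_run(tasks, "train_model", "run-1")
print(cancelled)
```

Only the running and not-yet-scheduled tasks of the targeted run are changed; completed tasks and other runs keep their state.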

Programmatic cancellation avoids resource wastage and ensures efficient error handling without requiring users to interact directly with the Airflow UI.

Monitoring DAGs in Real Time

DAG monitoring is a critical component of the ML platform’s reliability. Wix uses Kafka to report the status of each DAG task. Each task sends updates, including metadata, to a Kafka topic via success and failure callbacks. The platform consumes these updates and displays them in real time in its UI, where users can monitor task progress, view error messages, and follow a link to Airflow for further details.
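One way such callbacks could be structured is sketched below. Airflow passes a context dictionary to functions registered as `on_success_callback` and `on_failure_callback`. The message fields chosen here are illustrative, and `publish` is a hypothetical stand-in for a Kafka producer's send call, so the example runs without Kafka or Airflow installed.

```python
import json
from types import SimpleNamespace


def build_status_message(context, status):
    """Collect task metadata from an Airflow callback context."""
    ti = context["task_instance"]
    message = {
        "dag_id": ti.dag_id,
        "task_id": ti.task_id,
        "run_id": ti.run_id,
        "try_number": ti.try_number,
        "status": status,
        "log_url": ti.log_url,  # lets a UI link back to Airflow
    }
    error = context.get("exception")  # only present on failure
    if error is not None:
        message["error"] = str(error)
    return message


def make_callback(status, publish):
    """Return a callback that serializes the message and hands it to publish."""
    def callback(context):
        publish(json.dumps(build_status_message(context, status)))
    return callback


# Stand-in for a Kafka topic: messages are appended to a list.
sent = []
on_success = make_callback("success", sent.append)
on_failure = make_callback("failed", sent.append)

# Fake context that mimics the attributes read above.
fake_ti = SimpleNamespace(
    dag_id="train_model",
    task_id="fit",
    run_id="run-1",
    try_number=1,
    log_url="http://localhost:8080/log",
)
on_failure({"task_instance": fake_ti, "exception": ValueError("empty dataset")})
print(sent[0])
```

In a DAG, `on_success` and `on_failure` would be passed as the task's `on_success_callback` and `on_failure_callback` arguments, with `publish` bound to a real producer.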

Additionally, Wix employs monitoring DAGs for broader pipeline visibility. For instance, one monitoring DAG identifies paused or inactive DAGs and alerts the relevant owners via Slack. This ensures that critical workflows remain operational and helps optimize performance by identifying bottlenecks or recurring issues.
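The core of a check like this can be sketched as a small filter-and-group step. The input mirrors the `dag_id`, `is_paused`, `is_active`, and `owners` fields that the Airflow 2 REST API returns for each DAG. The sample DAGs, owner names, and alert wording are hypothetical, and posting the message to Slack is left out.

```python
from collections import defaultdict


def paused_dags_by_owner(dags):
    """Group the ids of paused or inactive DAGs under each of their owners."""
    grouped = defaultdict(list)
    for dag in dags:
        if dag["is_paused"] or not dag["is_active"]:
            for owner in dag["owners"]:
                grouped[owner].append(dag["dag_id"])
    return dict(grouped)


def format_alert(owner, dag_ids):
    """Build the text of one alert; sending it to Slack is not shown."""
    names = ", ".join(sorted(dag_ids))
    return f"@{owner}: {len(dag_ids)} DAG(s) paused or inactive: {names}"


# Hypothetical DAG listing.
dags = [
    {"dag_id": "train_model", "is_paused": False, "is_active": True,
     "owners": ["ml-platform"]},
    {"dag_id": "enrich_dataset", "is_paused": True, "is_active": True,
     "owners": ["data-eng"]},
    {"dag_id": "predict_batch", "is_paused": False, "is_active": False,
     "owners": ["data-eng", "ml-platform"]},
]
grouped = paused_dags_by_owner(dags)
for owner, dag_ids in grouped.items():
    print(format_alert(owner, dag_ids))
```

Grouping by owner means each team receives a single message listing all of its affected DAGs rather than one alert per DAG.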

Note that since the Airflow Summit, Astronomer has released Astro Observe as an additional monitoring option for Apache Airflow. Astro Observe brings rich visibility and actionable intelligence to data pipelines.

Unlocking Airflow’s Potential

Wix’s implementation demonstrates how Airflow can be used beyond traditional scheduling to streamline and automate machine learning workflows. By leveraging Airflow’s API and Python libraries, Wix has created a scalable solution for data scientists, abstracting operational complexity while maintaining visibility and control.

For teams looking to expand Airflow’s role in their data operations (DataOps), Wix’s approach offers a proven blueprint for integrating Airflow into complex machine learning systems.

Next Steps

Explore Wix’s innovative use of Airflow in machine learning. Watch the full session replay, “Elevating Machine Learning Deployment: Unleashing the Power of Airflow in Wix’s ML Platform,” to learn more.

The upcoming Airflow 3 release further expands the flexibility engineering teams have in running their data pipelines, whether they run those on regular schedules, on demand, or with event-driven, reactive execution. The best way to get started with Airflow for MLOps or other workflow orchestration use cases is on Astro, the industry’s leading Airflow managed service. You can try Astro for free.