Airflow in Action: $160BN in Assets, 4,000 Data Pipelines. Financial Workflow Insights from Robinhood


Robinhood Markets, Inc. transformed financial services by introducing commission-free stock trading, democratizing access to the markets for millions of investors. Today, Robinhood lets customers trade stocks, options, commodity interests, and crypto, invest for retirement, and earn with Robinhood Gold. As of October 31, 2024, Robinhood serves over 24 million customers and has $160 billion in assets under management.

Robinhood’s Optimizing Critical Operations session at this year’s Airflow Summit explored how Apache Airflow® supports its trading and operational workflows at scale. This talk detailed the architecture, development environments, migration to Airflow 2, and future plans, showcasing how Airflow delivers reliability and flexibility for mission-critical processes.

Airflow Use Cases at Robinhood

Robinhood relies on Airflow for diverse use cases across trading, clearing, money movement, and backend services.

- Key trading-related workflows include charging margin interest daily, processing stock options after expiration, and managing cost basis adjustments.

- Clearing workflows ensure equity settlements complete on a T+1 schedule (one business day after the trade date), validate corporate actions like stock splits, and maintain accuracy for crypto holdings.

Additionally, Airflow powers thousands of daily transactions between banks and Robinhood accounts, report generation, and backend data ingestion tasks, such as populating internal search engines. This extensive usage underpins Robinhood’s ability to meet the operational demands of a modern, cloud-native brokerage.
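To make the T+1 settlement rule concrete, here is a minimal Python sketch of the date arithmetic a clearing workflow might depend on. It is an illustration only, not Robinhood's code: the `settlement_date` function and the `MARKET_HOLIDAYS` set are hypothetical, and a real pipeline would load holidays from an authoritative exchange calendar.

```python
from datetime import date, timedelta

# Hypothetical holiday set for illustration; a real workflow would load
# this from an authoritative market calendar.
MARKET_HOLIDAYS = frozenset({date(2024, 12, 25)})


def is_business_day(day: date, holidays=MARKET_HOLIDAYS) -> bool:
    """True when `day` is a weekday that is not a listed holiday."""
    return day.weekday() < 5 and day not in holidays


def settlement_date(trade_date: date, offset: int = 1,
                    holidays=MARKET_HOLIDAYS) -> date:
    """Return the date `offset` business days after `trade_date` (T+offset)."""
    current = trade_date
    remaining = offset
    while remaining > 0:
        current += timedelta(days=1)
        if is_business_day(current, holidays):
            remaining -= 1
    return current


# A trade on Friday, November 1, 2024 settles the following Monday.
friday_trade = settlement_date(date(2024, 11, 1))
```

A daily DAG could call a helper like this to decide which trades are due to settle on the current run date.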

Airflow Architecture: Reliability at Scale

To handle these workflows, Robinhood employs a multi-cluster Airflow architecture with 15 clusters supporting its different business domains. Each cluster integrates deeply with backend services, deploying tightly coupled Airflow workers alongside business logic. Kubernetes powers the infrastructure, with SQS managing task queuing and PostgreSQL serving as the metadata store.

Figure 1: Robinhood’s Airflow architecture. Central schedulers are responsible for workloads from multiple teams, with tasks themselves run on workers owned by each team. Image source.

High availability is ensured by deploying clusters across multiple Kubernetes environments and leveraging multi-AZ setups. To prevent disruptions, Robinhood synchronizes DAG deployments with backend service versions, minimizing downtime and inconsistencies.

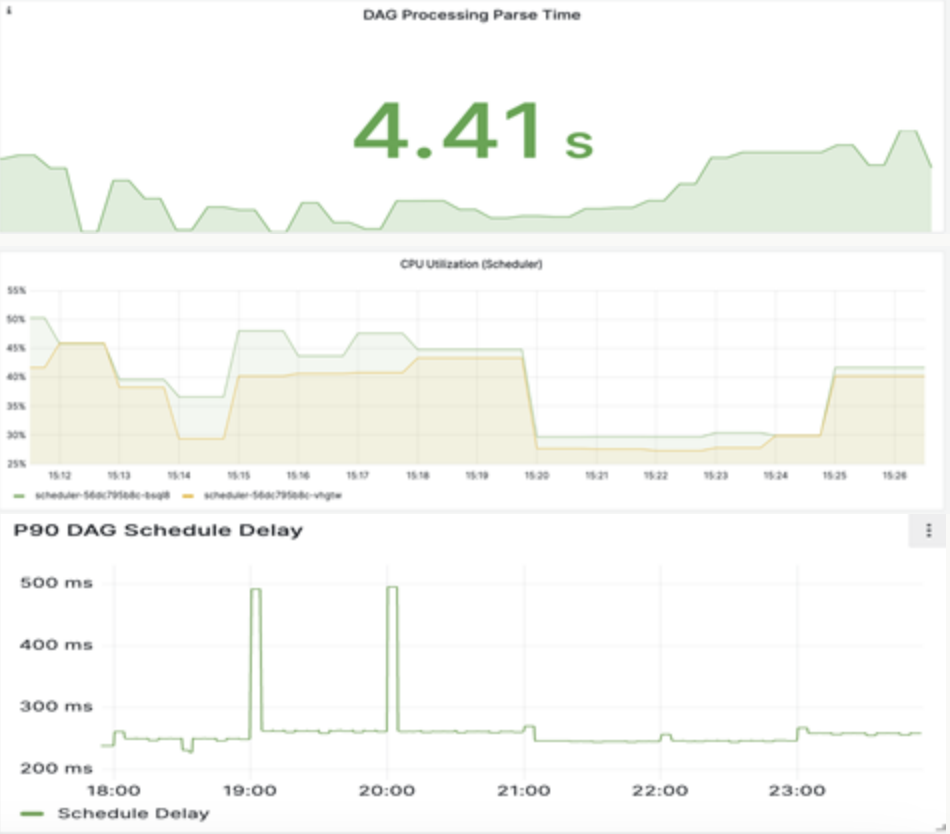

Furthermore, Robinhood augmented Airflow with several customizations to improve observability and maintain SLAs on key metrics such as DAG parsing time, DAG deployments, scheduler delays, and task instance failure rates. The team also uses a custom metrics plugin to fill gaps in the available metrics, along with a collection of operators and sensors that tailor Airflow monitoring to their exact needs.
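As a rough illustration of SLA checks on metrics like these, the Python sketch below compares one cluster snapshot against thresholds. Everything in it is assumed for the example: the `ClusterMetrics` fields, the `SLA_LIMITS` values, and the `sla_breaches` helper are hypothetical and do not reflect Robinhood's plugin or its actual limits.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class ClusterMetrics:
    """One snapshot of the metrics named above for a single cluster."""
    dag_parse_seconds: float
    scheduler_delay_seconds: float
    task_failures: int
    task_runs: int

    @property
    def task_failure_rate(self) -> float:
        # Guard against division by zero when no tasks ran in the window.
        return self.task_failures / self.task_runs if self.task_runs else 0.0


# Hypothetical thresholds chosen only for this example.
SLA_LIMITS: Dict[str, float] = {
    "dag_parse_seconds": 30.0,
    "scheduler_delay_seconds": 60.0,
    "task_failure_rate": 0.01,
}


def sla_breaches(metrics: ClusterMetrics,
                 limits: Dict[str, float] = SLA_LIMITS) -> List[str]:
    """Return the names of metrics whose value exceeds its limit."""
    return [name for name, limit in limits.items()
            if getattr(metrics, name) > limit]


snapshot = ClusterMetrics(dag_parse_seconds=12.0,
                          scheduler_delay_seconds=75.0,
                          task_failures=5, task_runs=1000)
breached = sla_breaches(snapshot)
```

In practice the snapshot values would come from whatever metrics backend the clusters report to, and a breach would trigger an alert rather than just returning a list.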

Multi-Environment Airflow Platform

Robinhood’s multi-environment platform provides seamless developer experiences with environments for development, testing, and production. The company’s Apollo test platform replicates production architectures using local stack simulations for end-to-end testing. A separate load testing environment generates stress scenarios to evaluate cluster reliability under high loads, analyzing metrics like DAG parsing times and CPU utilization.

Figure 2: Tracking key metrics during stress testing of new pipelines. Image source.

This multi-environment setup ensures reliability while enabling robust testing and change management, critical for a high-stakes financial ecosystem.

Migration to Airflow 2 and Future Plans

Robinhood’s migration from Airflow 1.10 to Airflow 2 marked a significant upgrade, involving its 15 clusters, 75 workers, and over 4,000 data pipelines. The migration was executed methodically, using a side-by-side approach to transition workloads without downtime. Challenges included updating task dependencies, ensuring cross-platform compatibility, and maintaining SLAs for critical workflows tied to stock market timings.

Figure 3: Sizing the challenges of Robinhood’s Airflow migration. Image source.

Custom tools automated much of the migration, reducing effort and ensuring smooth transitions. For instance, an S3-based operator coordinated task status across old and new environments, while automation frameworks streamlined DAG migrations across clusters.
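The talk summary does not describe how the S3-based operator works internally, so the following Python sketch shows only the general idea of coordinating through shared object storage: one environment writes a status marker and the other polls for it. The `InMemoryStore` class stands in for an S3 bucket, and the key layout and the `publish_status` and `wait_for_status` functions are hypothetical names invented for this example.

```python
import time
from typing import Callable, Dict, Optional


class InMemoryStore:
    """Stand-in for an object store such as S3 (string keys, string bodies)."""

    def __init__(self) -> None:
        self._objects: Dict[str, str] = {}

    def put(self, key: str, body: str) -> None:
        self._objects[key] = body

    def get(self, key: str) -> Optional[str]:
        return self._objects.get(key)


def status_key(dag_id: str, task_id: str, run_date: str) -> str:
    # Hypothetical key layout shared by the old and new environments.
    return f"airflow-migration/{dag_id}/{task_id}/{run_date}.status"


def publish_status(store: InMemoryStore, dag_id: str, task_id: str,
                   run_date: str, status: str) -> None:
    """Called by the environment that ran the task."""
    store.put(status_key(dag_id, task_id, run_date), status)


def wait_for_status(store: InMemoryStore, dag_id: str, task_id: str,
                    run_date: str, expected: str = "success",
                    timeout: float = 60.0, poke_interval: float = 5.0,
                    sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll until the marker equals `expected`; return False on timeout."""
    key = status_key(dag_id, task_id, run_date)
    deadline = time.monotonic() + timeout
    while True:
        if store.get(key) == expected:
            return True
        if time.monotonic() >= deadline:
            return False
        sleep(poke_interval)
```

With a pattern like this, a DAG already moved to the new environment can wait on an upstream task that still runs in the old one, which allows pipelines to migrate one at a time.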

Looking ahead, Robinhood aims to enhance Airflow’s integration with its internal authentication system, implement in-place upgrades with zero downtime, and build data backfill servers as pipelines change.

Next Steps

Robinhood’s journey underscores the transformative role Airflow plays in powering complex financial workflows. Watch the session replay Optimizing Critical Operations: Enhancing Robinhood’s Workflow Journey with Airflow to learn more.

Looking forward to the upcoming Airflow 3, there are a number of enhancements that will serve data platform teams with similar requirements to Robinhood:

- A decoupling of the Airflow architecture will enable workers to be deployed to any cloud, on-prem data center, hybrid or edge environment, while delivering even higher security isolation, resilience and scalability.

- Task isolation provides stronger security and simplified multi-tenancy with tasks no longer having direct access to the Airflow metadatabase.

- Backfills will be integrated with the scheduler and balanced against regular DAG execution, so they run more predictably and at scale.

The best way to get ready for Airflow 3 is to start out now with Astro, the leading Airflow managed service from Astronomer.io. With Astro, your organization will be ready to unlock the latest in Airflow with in-place upgrades and seamless rollbacks. You can get started with Astro for free here.