Airflow in Action: Scaling Insights from Bosch and 1.2 Million Pipeline Runs Per Day

The Bosch Group is a leading global supplier of technology and services, with sales of €91.6 billion ($100 billion) in 2023. It employs around 430,000 people worldwide, nearly 50,000 of whom are software engineers. The company’s operations are divided into four business sectors: Mobility, Industrial Technology, Consumer Goods, and Energy and Building Technology. The company uses technology and data to shape trends that affect all of us at home and at work: automation, electrification, digitalization, connectivity, and sustainability.

Handling 1.2 million pipeline runs per day, Apache Airflow® is central to data-driven applications and analytics at Bosch. However, this has not always been the case. In his talk at the Airflow Summit, Jens Scheffler, Technical Architect for Digital Testing Automation at Bosch, gave a deep dive into how Bosch tuned its Airflow setup, along with the best practices and anti-patterns the team observed along the way.

Bosch’s Scaling Journey: From 1,000 to 50,000 Runs Per Hour

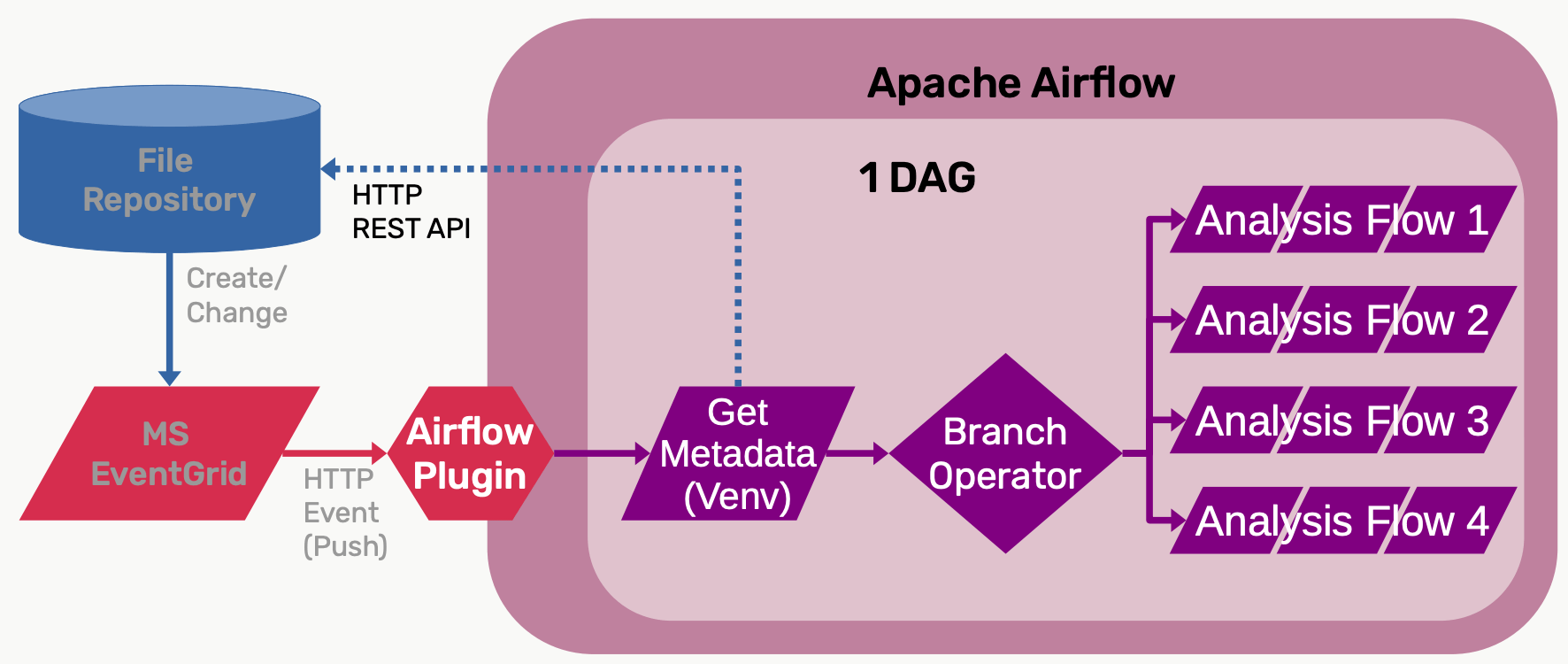

Most of the data that Jens’s team works with is file-based. The initial Airflow environment at Bosch was sized to handle 1,000 DAG runs per hour and used an event-driven pattern: file changes in Bosch’s internal file repository were published as events to Microsoft Azure Event Grid, a pub/sub service, which in turn triggered pipelines in Airflow. However, a series of event storms caused tasks to queue up, degrading the freshness of data outputs. As these event storms became more frequent, scaling Airflow became a priority for the team at Bosch.
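To make the event-driven pattern more concrete, here is a minimal sketch of how a single Event Grid event could be turned into an Airflow DAG run. It makes several assumptions. The events are taken to follow the Event Grid event schema (`id`, `eventType`, `subject`, `eventTime`), and runs are triggered through Airflow 2’s stable REST API endpoint `POST /api/v1/dags/{dag_id}/dagRuns`. The webserver URL, the `process_file_change` DAG ID, and the `build_trigger_request` helper are all hypothetical, and authentication is omitted. The talk summary does not say how Bosch actually wires Event Grid to Airflow.

```python
import json
import urllib.request
from urllib.parse import quote

# Hypothetical placeholders: the Airflow webserver URL and the target DAG ID.
AIRFLOW_BASE_URL = "http://airflow-webserver:8080"
TARGET_DAG_ID = "process_file_change"


def build_trigger_request(event: dict, base_url: str = AIRFLOW_BASE_URL,
                          dag_id: str = TARGET_DAG_ID) -> urllib.request.Request:
    """Map one Event Grid event to a POST against Airflow's stable REST API
    (POST /api/v1/dags/{dag_id}/dagRuns), passing event details as run conf.
    Hypothetical helper; authentication headers are intentionally left out."""
    body = {
        # Derive the run ID from the Event Grid event ID, so a redelivered
        # event maps to the same run ID instead of creating a second run.
        "dag_run_id": f"eventgrid__{event['id']}",
        "conf": {
            "event_type": event["eventType"],
            "subject": event["subject"],
            "event_time": event["eventTime"],
        },
    }
    return urllib.request.Request(
        url=f"{base_url}/api/v1/dags/{quote(dag_id)}/dagRuns",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Deriving `dag_run_id` from the event ID is a deliberate choice. If an event is redelivered during a storm, Airflow rejects the duplicate run ID with a 409 Conflict rather than starting a second run. You would send the request with `urllib.request.urlopen(req)`.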

Figure 1: Data-driven pipeline architecture at Bosch. Image source.

In his session, Jens outlined the iterations the team went through, including:

- Throwing hardware at the problem: Tuning the maximum number of active DAG runs and tasks, along with the number of concurrent task instances, then scaling out the number of schedulers and workers and scaling up the database instance.

- Upgrading and tuning config parameters: Developing patches to both the scheduler and the Python virtual environment operator (PythonVirtualenvOperator). All of these patches have been contributed back to the Airflow project, landing in versions 2.6 through 2.8.

- DAG and environment optimizations: Custom policies for archiving DAG events (7-day retention with a daily cleanup), event filtering and support for batched events, and HTTP rate limits.
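The event-side optimizations in the list above can be illustrated with plain Python. This sketch is not Bosch’s implementation. The `FileEvent` record, the suffix-based filter, and the batch size of 100 are hypothetical assumptions; only the 7-day retention window comes from the talk summary. The code does three things. It drops irrelevant events, groups the remaining ones into batches so that one DAG run can handle many files, and splits stored events into those to keep and those to archive.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Iterable, Iterator, List, Tuple

# The 7-day window mirrors the described policy; the suffixes and batch size
# are hypothetical illustration values.
RETENTION = timedelta(days=7)
RELEVANT_SUFFIXES = (".csv", ".parquet")
BATCH_SIZE = 100


@dataclass(frozen=True)
class FileEvent:
    """Hypothetical minimal record for one file-change event."""
    path: str
    received_at: datetime


def filter_events(events: Iterable[FileEvent]) -> Iterator[FileEvent]:
    """Drop events for files no pipeline consumes (here: by file suffix)."""
    return (e for e in events if e.path.endswith(RELEVANT_SUFFIXES))


def batch_events(events: Iterable, size: int = BATCH_SIZE) -> Iterator[List]:
    """Group events so one DAG run processes many files instead of one run per file."""
    batch: List = []
    for event in events:
        batch.append(event)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:  # emit the final, partially filled batch
        yield batch


def split_for_archive(events: Iterable[FileEvent],
                      now: datetime) -> Tuple[List[FileEvent], List[FileEvent]]:
    """Partition events into (keep, archive) by the retention window.
    A daily job could run this and move the 'archive' part out of the hot store."""
    cutoff = now - RETENTION
    keep: List[FileEvent] = []
    archive: List[FileEvent] = []
    for event in events:
        (keep if event.received_at >= cutoff else archive).append(event)
    return keep, archive
```

For Airflow’s own metadata database, Airflow 2.3 and later provide the `airflow db clean` command. Its `--clean-before-timestamp` option purges rows older than a given timestamp. The talk summary does not say whether Bosch’s retention policy builds on it.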

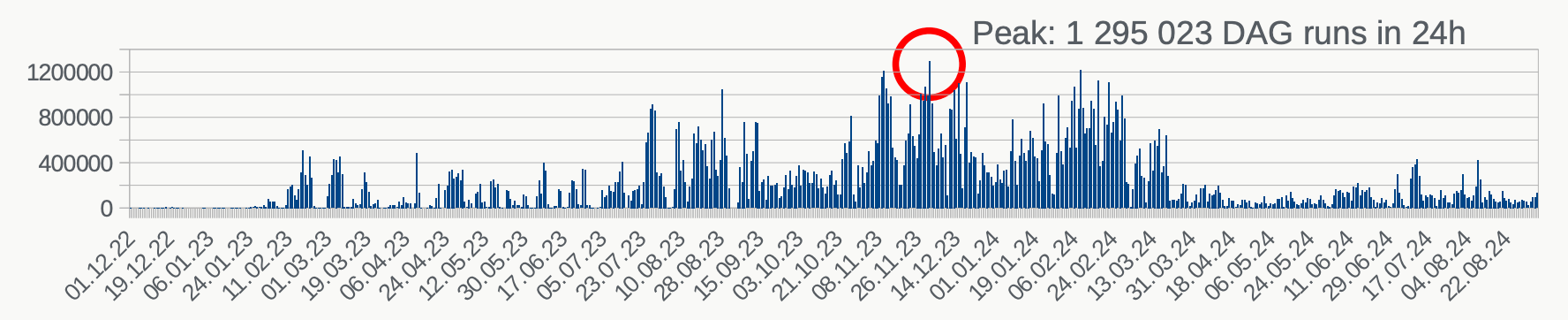

Figure 2: DAG run metrics from the Airflow deployment at Bosch. Image source.

Today, Bosch has scaled Airflow to 50,000 DAG runs per hour, with peaks as high as 70,000! Airflow processes an average of 1.7 million events per day with about one second of latency (down from 5 seconds).
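The headline figures are consistent with each other: 1.2 million runs per day averages out to 1,200,000 / 24 = 50,000 runs per hour. The sketch below uses Little’s law (average concurrency = arrival rate × average duration) to estimate how many concurrent runs and workers a peak of 70,000 runs per hour implies. This is the kind of back-of-envelope reasoning that informs concurrency limits and worker counts. The 30-second average run duration, the 16 slots per worker, and the assumption that each run occupies one slot at a time are hypothetical, not Bosch’s numbers.

```python
import math

# Figures reported in the talk summary.
RUNS_PER_DAY = 1_200_000
PEAK_RUNS_PER_HOUR = 70_000
EVENTS_PER_DAY = 1_700_000

# Hypothetical assumptions (not from Bosch): average run duration, and how
# many runs one worker can execute at once. Each run is treated as one slot.
AVG_RUN_SECONDS = 30.0
SLOTS_PER_WORKER = 16


def runs_per_hour(runs_per_day: int) -> float:
    """Convert a daily volume into an average hourly rate."""
    return runs_per_day / 24


def concurrent_slots(arrivals_per_hour: float, avg_seconds: float) -> int:
    """Little's law: average work in progress L = arrival rate x time in system."""
    return math.ceil(arrivals_per_hour / 3600 * avg_seconds)


def workers_needed(slots: int, slots_per_worker: int = SLOTS_PER_WORKER) -> int:
    """Round up so the peak concurrency fits into whole workers."""
    return math.ceil(slots / slots_per_worker)


if __name__ == "__main__":
    print(f"average: {runs_per_hour(RUNS_PER_DAY):,.0f} runs/hour")
    print(f"events: {EVENTS_PER_DAY / 86_400:.1f} events/second on average")
    peak_slots = concurrent_slots(PEAK_RUNS_PER_HOUR, AVG_RUN_SECONDS)
    print(f"peak: {peak_slots} concurrent runs -> {workers_needed(peak_slots)} workers")
```

Under these assumptions, the script reports an average of 50,000 runs/hour and about 19.7 events per second. At peak, it estimates 584 concurrent runs, which would need 37 workers. With the CeleryExecutor, slots per worker corresponds to the `[celery] worker_concurrency` setting. The talk summary does not say which executor Bosch runs.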

Next steps

To dig into the details of Bosch’s scaling journey, watch the session replay, “How we Tuned our Airflow to Make 1.2 Million DAG Runs Per Day!”

The fastest way to scale Airflow is by building and running your pipelines on the Astro managed service. You can get started by evaluating Astro for free today.