Airflow in Action: 33% Faster Deploys with 90% Higher Data Quality. Astronomer at Autodesk


At the Airflow Summit 2024, Bhavesh Jaisinghani, Data Engineering Manager at Autodesk, shared how the company transformed testing of its data pipelines by creating a secure, production-like UAT environment with Astronomer and Apache Airflow®. Attendees learned how this setup enabled seamless testing of Spark-based workflows, reduced development cycles, and ensured data security for sensitive PII.

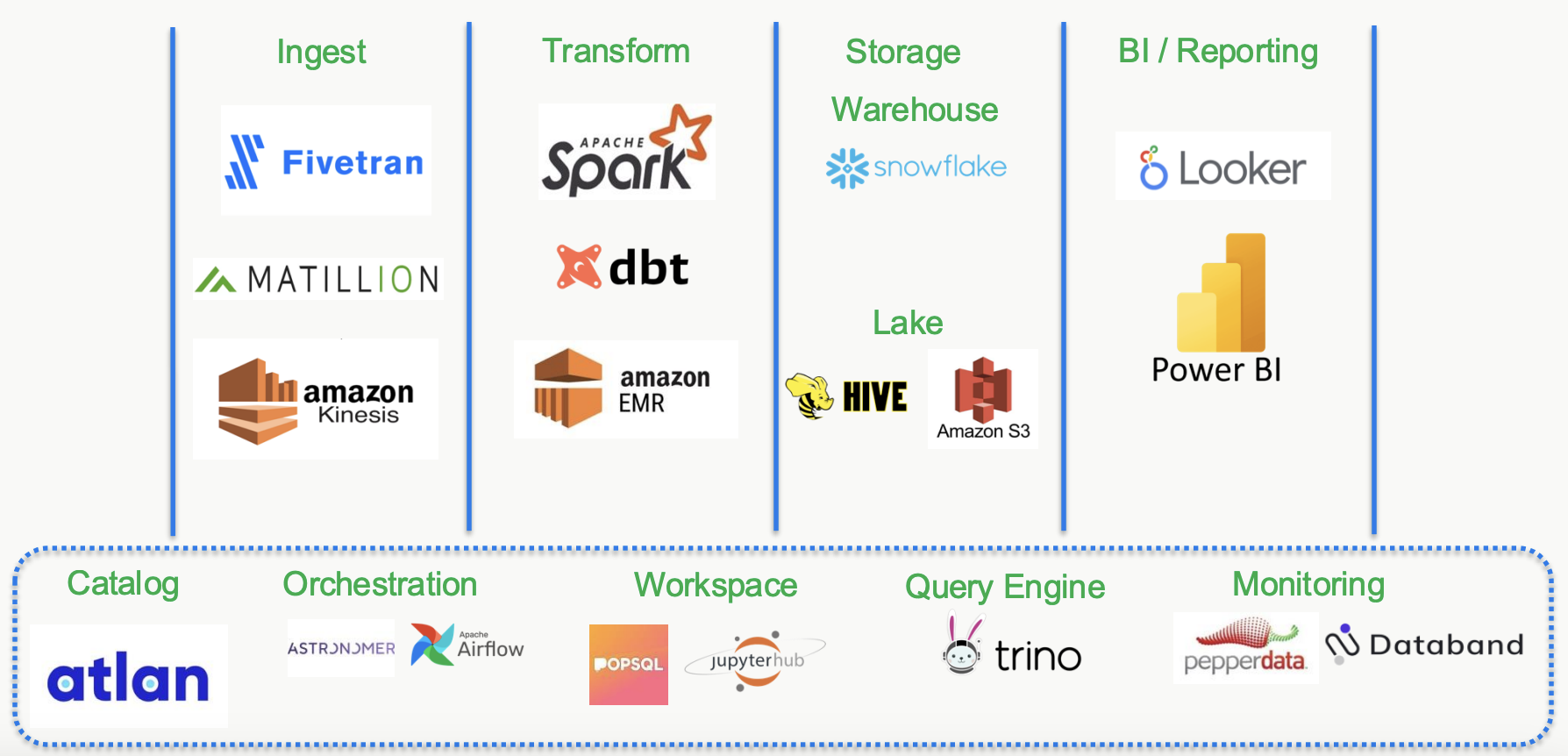

Autodesk’s Data Stack

Autodesk’s data platform consists of four primary layers: ingestion, transformation, data warehousing, and BI / reporting. Bhavesh’s team focuses on the transformation-to-BI layers, relying heavily on Apache Spark® for data processing, with Apache Hive and Amazon S3 used for data cataloging and storage.

Figure 1: Autodesk’s tech stack, underpinned by Astronomer and Airflow for orchestration. Image source.

The BI stack includes Trino for querying, with Looker and Power BI for data visualization. Autodesk leverages Astro, Astronomer’s managed Airflow platform, to orchestrate workflows across a multi-tenant setup with development, staging, and production environments.

Challenges in Pipeline Testing

Autodesk’s workflows are underpinned by dynamic business rules and large-scale data processing with stringent security requirements governing how sensitive data such as PII is handled. It was these requirements that created the most significant issues during pipeline testing. Copying production data to dev and test environments required multiple internal approvals.

The consequences for the business included long dev/test cycles and a fragmented developer experience. With production data inaccessible, engineers often had to fall back on incomplete local data sets during testing.

Creating the Ideal UAT Environment

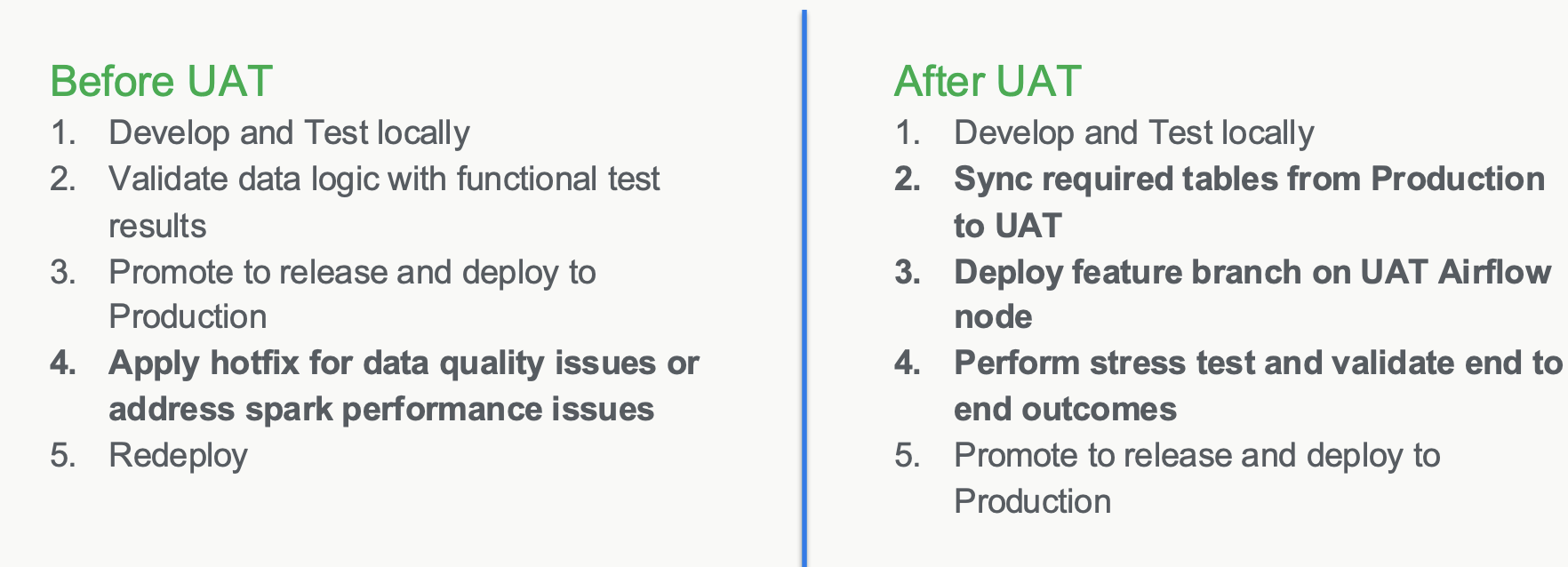

To address these challenges, Autodesk implemented a UAT (User Acceptance Testing) environment mirroring production. Key features include:

- Separate Infrastructure: A dedicated Amazon EMR (Elastic MapReduce) cluster and Hive schema ensured that testing had no impact on production workflows.

- Granular Access Control: Astronomer workspaces enabled isolated developer environments, preventing accidental writes to production.

- Data Sync Utilities: Automated tools allowed selective synchronization of production data to UAT, preserving privacy while enabling testing.

- CI/CD Integration: Standardized pipelines supported seamless deployment to UAT, reducing manual errors and increasing testing efficiency.
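The talk summary does not describe how Autodesk's data sync utilities work internally, so the following is only a minimal sketch of what "selective synchronization" could look like. It assumes a column-level policy in which some fields are copied as-is, some are pseudonymized with a salted hash, and everything else is dropped. The column names, the `select_for_uat` helper, and the hashing approach are all hypothetical and chosen for illustration.

```python
import hashlib
from typing import Dict, Iterable, List

# Hypothetical policy (not from the talk): columns that may be copied unchanged,
# and columns that must be pseudonymized before they reach UAT.
ALLOWED_COLUMNS = {"order_id", "product", "amount"}
PSEUDONYMIZED_COLUMNS = {"email"}


def pseudonymize(value: str, salt: str) -> str:
    """Replace a sensitive value with a salted SHA-256 hex digest."""
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()


def select_for_uat(rows: Iterable[Dict], salt: str) -> List[Dict]:
    """Keep allowed columns, hash pseudonymized ones, drop everything else."""
    synced = []
    for row in rows:
        out = {}
        for column, value in row.items():
            if column in ALLOWED_COLUMNS:
                out[column] = value
            elif column in PSEUDONYMIZED_COLUMNS:
                out[column] = pseudonymize(str(value), salt)
            # Columns not named in the policy are dropped by default.
        synced.append(out)
    return synced
```

Dropping unlisted columns by default means a newly added sensitive field stays out of UAT until someone explicitly classifies it, which is one reasonable way to keep a sync tool on the safe side.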

Astronomer’s advanced features built around Airflow play a critical role in enabling the new UAT environment, providing scalability, tenant-level deployments, and integrated CI/CD workflows.
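The separation between UAT and production described above can be pictured as a per-environment configuration plus a guard that refuses writes to the production schema from any other environment. This is an illustrative sketch only: the cluster labels, schema names, and helper functions are assumptions, and it does not represent Astro's workspace access controls or Autodesk's actual setup.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvConfig:
    name: str
    emr_cluster: str  # hypothetical cluster label
    hive_schema: str  # hypothetical Hive schema name


# Hypothetical environment registry; all values are placeholders.
ENVIRONMENTS = {
    "uat": EnvConfig("uat", "emr-uat", "analytics_uat"),
    "prod": EnvConfig("prod", "emr-prod", "analytics"),
}


def resolve_target(deploy_env: str, table: str) -> str:
    """Return the fully qualified table name for the given environment."""
    config = ENVIRONMENTS[deploy_env]
    return f"{config.hive_schema}.{table}"


def assert_safe_write(deploy_env: str, target: str) -> None:
    """Raise if a non-production environment targets the production schema."""
    prod_schema = ENVIRONMENTS["prod"].hive_schema
    schema = target.split(".", 1)[0]
    if deploy_env != "prod" and schema == prod_schema:
        raise PermissionError(
            f"{deploy_env} may not write to production schema '{prod_schema}'"
        )
```

A pipeline task would call `resolve_target` to pick its output table and `assert_safe_write` before writing, so a misconfigured UAT job fails fast instead of touching production data.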

Figure 2: Contrasting the development flow pre and post UAT implementation. Image source.

UAT Environment: Metrics and Outcomes

Implementing the UAT environment delivered impressive results to the business:

- Error Reduction: Improved data quality by 90%, minimizing downstream issues.

- Faster Deployments: Production deployment cycles were reduced by 33%.

- Technical Debt Reduction: Spark job optimization time decreased by 60%.

- Improved Infrastructure Testing: Supported smooth migrations, including Spark and EMR upgrades.

Next Steps

Autodesk’s innovative approach, powered by Astronomer and Airflow, showcases how secure, scalable UAT environments enable faster, more reliable pipeline development. To learn more, watch Bhavesh’s full session, “Scale and Security: How Autodesk Securely Develops and Tests PII Pipelines.”

You can read more about data engineering at Autodesk in our blog post, “Autodesk’s Data Engineering Transformation with Astronomer and Apache Airflow.”

Ready to transform your data workflows like Autodesk did? Sign up for a free trial of Astro today to learn how we can help you scale your data engineering efforts with ease.